This is a pre-sales, hardware-related question:-

- I am planning on using 15 Samsung Evo SSDs with the 3D-printed caddy. Is the Corsair PSU included with the HL15 suitable to power all 15 SSDs?

- The SSDs run at 5V, whereas the HDDs run at 12V. Is there any voltage conversion being done on the backplane itself?

- Can I create a ZFS pool with 2 vDEVs of 7 drives each?

- Will the PSU be able to power all 15 SSDs at the same time?

- Can I swap out the fans with quieter Noctua Fans? Would I also have to replace the CPU cooler?

Not sure I understand the comment about the SSD Caddy. Are you trying to run the 15 drives on the backplane PLUS an additional 15 SSDs?

The RM750e is rated for 20A on the 5V rail. So, 100W. A SATA SSD uses at most 5W under load, so 15 SATA SSDs should be no problem. NVMe SSDs can draw more power under load.
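As a rough sanity check, the arithmetic above can be sketched like this. The 20A rail rating and the 5W per-drive peak are the figures quoted in this post; the sketch assumes the SSDs draw only from the 5V rail and ignores anything else on that rail (motherboard, USB devices), so treat the headroom as optimistic.

```python
# Back-of-envelope 5V rail budget using the figures quoted in this thread.
RAIL_VOLTS = 5.0
RAIL_AMPS = 20.0          # RM750e 5V rail rating, as quoted above
SSD_PEAK_WATTS = 5.0      # worst-case draw per SATA SSD, as quoted above
DRIVE_COUNT = 15


def rail_budget(volts, amps, watts_per_drive, drives):
    """Return (rail capacity, total drive load, headroom) in watts."""
    capacity = volts * amps
    load = watts_per_drive * drives
    return capacity, load, capacity - load


capacity_w, load_w, headroom_w = rail_budget(
    RAIL_VOLTS, RAIL_AMPS, SSD_PEAK_WATTS, DRIVE_COUNT
)
print(f"5V rail capacity: {capacity_w:.0f} W")
print(f"15 SSDs at peak:  {load_w:.0f} W")
print(f"Headroom:         {headroom_w:.0f} W")
```

So even with every drive at its peak at the same moment, the drives alone would use about three quarters of the rail.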

You can create a ZFS pool with 2 vdevs of 7 drives each. Whether that is optimal for your use case, I don’t know. The number of drives in a vdev doesn’t have to be a multiple of 2 or any other particular number.
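To get a feel for what a 2 x 7 layout gives you, here is a minimal capacity sketch. The RAID-Z level and the 2 TB drive size are assumptions for illustration only (neither was stated in the question), and the estimate ignores ZFS metadata, padding and the free space you would normally keep in reserve.

```python
def usable_tb(vdevs, drives_per_vdev, parity, drive_tb):
    """Rough usable capacity: data drives per vdev times drive size.

    Ignores ZFS metadata, allocation padding and recommended free space.
    """
    if parity >= drives_per_vdev:
        raise ValueError("parity must be smaller than the vdev width")
    return vdevs * (drives_per_vdev - parity) * drive_tb


DRIVE_TB = 2.0  # hypothetical drive size, not from the thread

for parity, level in ((1, "raidz1"), (2, "raidz2")):
    capacity = usable_tb(vdevs=2, drives_per_vdev=7, parity=parity, drive_tb=DRIVE_TB)
    print(f"2 x 7-wide {level}: about {capacity:.0f} TB usable")
```

With 14 of the 15 bays used this way, one bay stays free.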

There are many threads here you can read through about fan replacement. The problem IMO isn’t the fans per se, it is that they are wired to run at max RPM. You can keep the existing wiring and use fan resistor cables to bring down the RPMs, or you can rejigger the wiring to use the fan headers on the motherboard and respond to a fan curve with PWM or VDC control. The supplied CPU cooler is passive, so you do need to be sure there is enough airflow from the case fans to move air out of the case for your workload and buildout. If you’re not loading up the PCIe slots or upgrading the CPU you should be fine. If you want to upgrade the CPU to one of the ones with a higher TDP available for the socket, you would probably want to change to an active CPU cooler with fan(s).

Just 15 drives in TOTAL.

I am looking for the following for my Home Lab:-

- A low power consumption system, particularly while running at idle.

- All SSD server. Thanks for the confirmation that the PSU will be able to handle all the SSDs at the same time.

- Low noise, as close to silent as it can be. Thanks for the detailed explanation regarding the fans. So essentially, if I switch the CPU cooler to an active one, and swap out the fans or run them at lower RPM, it should be all good? Note: I am not looking to upgrade the CPU; the included CPU is more than powerful enough for my use case, that is, a storage server running TrueNAS.

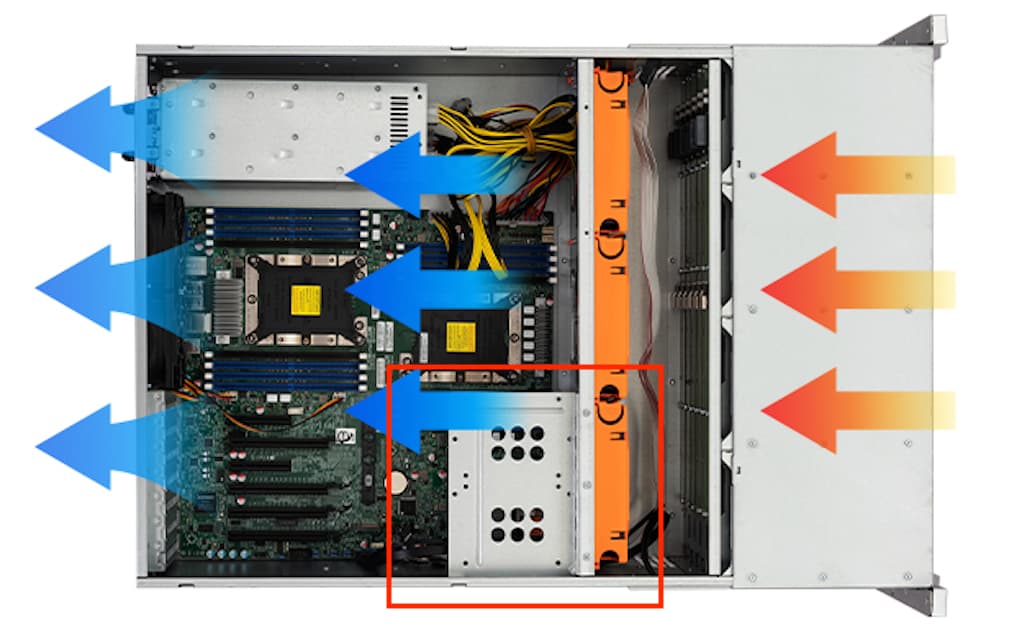

I was wondering, is there any additional attachment where I can mount dual SSDs to act as my RAID1 boot drive? Please find attached the picture for your reference.

I made a post on the forum about adding 2 SSDs using the spare DOM headers here: "Add 2 Extra SSDs - Hardware - 45HomeLab Forum".

The full build Xeon Bronze 3204 CPU only has a TDP of 85W. I think the people staying with the stock CPU are just swapping the case fans but keeping the passive cooler, unless they are maxing out the RAM or adding GPUs etc. I think the people swapping out the cooler for one of the tower coolers with or without fans are putting in CPUs with much higher TDP. Of course, it can’t hurt, but if your goal is low noise air cooling it might not be needed. I don’t know the idle power draw of the Cascade Lake Xeons, but I assume it is better than earlier Xeons.

I don’t have the full build. I purchased the chassis and put in a Ryzen 9 CPU & mobo so I’d have more headroom for VMs.

There are some holes in the rear of the case for mounting a rear drive bracket. I don’t know if @Ashley-45Drives and the team are going to sell one, but they’re fairly easy to 3D print. I’m just finalizing a design for one to take 6x NVMe drives with 15mm height each for my HL15s!

Otherwise, as others noted, SATA DOMs are an option for boot drives.

- Can you please share the cabling of the backplane, both power and data? How many power and data cables are there, and what are their respective connectors?

- I am assuming you are using an HBA, LSI 9207-8i or similar? Are you using an expander card as well?

- Are you using Proxmox, or some other OS?

I think 45HomeLab delivers great value with the fully built system, considering that the components are server grade and meant for 24x7 operation.

However, for home lab users, I guess we would enjoy more by building the system ourselves. Here is what I have in mind:-

- Purchase the chassis, backplane and PSU combo for USD 910. However, does this combo include the 6 case fans?

- B550 Motherboard - $120

- Ryzen 5600G, particularly because of the iGPU, and low power consumption - $142

- Noctua D15 CPU Cooler - $120

- DDR4 Ram, 64 GB - $105

- LSI SAS HBA 6Gb/s, most probably a 9207-8i, coupled with an expander card - $75 + $60.

- 10G NIC - approx. $100. Please recommend which NIC I should go for.

Total comes to about $910 + $120 + $142 + $120 + $105 + $75 + $60 + $100 = $1632

Not sure if the case fans are included, otherwise it would be an additional cost. I am thinking of Noctua 3000RPM fans, $28 x 6 = $168. For a grand total of $1800.
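For anyone who wants to tweak the numbers, the tally above can be reproduced with a small sketch. All prices are the ones listed in this post; the fan line only applies if the fans turn out not to be included with the chassis.

```python
# Parts and prices (USD) exactly as listed in the post above.
parts = {
    "Chassis + backplane + PSU combo": 910,
    "B550 motherboard": 120,
    "Ryzen 5600G": 142,
    "Noctua D15 CPU cooler": 120,
    "DDR4 RAM, 64 GB": 105,
    "LSI SAS HBA": 75,
    "Expander card": 60,
    "10G NIC (approx.)": 100,
}

FAN_PRICE = 28   # per Noctua fan, as quoted above
FAN_COUNT = 6

base_total = sum(parts.values())
fans_total = FAN_PRICE * FAN_COUNT
grand_total = base_total + fans_total

for name, price in parts.items():
    print(f"{name:<34} ${price}")
print(f"{'Subtotal':<34} ${base_total}")
print(f"{'Optional case fans':<34} ${fans_total}")
print(f"{'Grand total':<34} ${grand_total}")
```

Swapping a price or dropping the fan line shows quickly how close the self-build gets to the price of the full build.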

OS of choice: TrueNAS Scale.

For IPMI capabilities, I am thinking of PiKVM. However, this would be later down the road… We can quickly see how the cost mounts up, and the fully built system offered by 45HomeLab seems to be more appealing at this stage.

Would love recommendations and opinions on the above build.

The ASUS Hyper M.2 x16 PCIe expansion card is an option I am considering. It costs about $52 and can be found on Amazon, ASIN B07NQBQB6Z.

This way I don’t have to worry about additional cabling, and it would be a much more elegant solution.

Power for the backplane is delivered via four 4-pin Molex connectors. The data connectors are four SFF-8643 ports.

I purchased a used LSI 9300-16i for the HBA (which also has SFF-8643 ports), so that I’d have the simplest cabling and not have to deal with a second HBA or expander. I could have gone with a 92xx series solution, as I currently just have spinning SATA disks and don’t need 12 Gb/s, but I will probably add SAS disks and SSDs in the future.

I’m running TrueNAS Scale with a few VMs on the HL15. I realize Proxmox is more powerful for virtualization, but I’m more comfortable in TrueNAS right now and my use case was storage-first/VM-second. I’m learning Proxmox on a different system.

The backplane and backplane w/ PSU chassis options do come with fans installed, specifically CoolerGuys 120X120X25MM 3-Pin Medium Speed. You can review this thread for everything about fans and fan options.

I’m not sure the Noctua D15 will fit in the case, at least not with 140mm fans. See here. Other than that, your build seems fine to me. For 10G, are you planning to use SFP+/DAC or RJ45 connectors and Cat6 cable? I think 10G networking has become pretty much a commodity now. TrueNAS/Linux should support most NICs.

Are you looking at this for some of the storage (instead of/in addition to the SATA SSDs), or for the boot drive(s)? I think to set that up using all four NVMe drives, the motherboard needs to support PCIe bifurcation. In general, I’m not sure the B550 motherboards do. You may have to look at X570 motherboards. Or stay with your original plan of just buying the full build.

Similarly, if your plan is for that to be some really fast storage, you have to consider that the 5600G doesn’t have many PCIe lanes, so that might become a bottleneck if you’re expecting these NVMe SSDs to give you sustained thousands of MB/s.
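To put rough numbers on the lane question, here is a small sketch of theoretical PCIe link bandwidth. The transfer rates and 128b/130b encoding are the standard figures for PCIe 3.0 and 4.0; which generation and how many lanes your particular CPU and slot actually provide is something to check against the spec sheets, and real drives will land below these ceilings.

```python
# Raw transfer rate per lane in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING = 128 / 130  # 128b/130b line encoding used by PCIe 3.0 and 4.0


def lane_gbytes_per_s(gen):
    """Theoretical one-direction bandwidth of a single lane in GB/s."""
    return GT_PER_S[gen] * ENCODING / 8  # 8 bits per byte


def link_gbytes_per_s(gen, lanes):
    """Theoretical one-direction bandwidth of a link with the given width."""
    return lane_gbytes_per_s(gen) * lanes


for gen in (3, 4):
    per_drive = link_gbytes_per_s(gen, 4)    # one NVMe drive on an x4 link
    whole_card = link_gbytes_per_s(gen, 16)  # four drives sharing an x16 slot
    print(f"PCIe {gen}.0: x4 = {per_drive:.2f} GB/s, x16 = {whole_card:.2f} GB/s")
```

If the slot only gives the card eight lanes, or the board cannot split the slot four ways, the per-drive and total figures drop accordingly.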

I’m not the expert here; there are other posts from people who’ve configured NVMe RAID in the HL15. Just cautioning it might be something to look into a bit more depending on your use case.

Does the Corsair PSU have Molex Connectors?

For the boot drive. I am thinking of making 2 vdevs of 7 drives each, which will leave me with one open slot for a boot drive.

The full build seems more and more appealing at this point.

The RM750e is fully modular. You can see the specs here. The list of included cables is at the bottom of the page, including a 4x 4-pin PATA cable. All the unused cables (which is most of them) are included in the box of extras for the HL15 variants with PSU. Note, though, that the Molex cable isn’t actually the one used in the full build. For the full build they have some custom cables so they can power the four connectors from different harnesses on the PSU and have better cable management. You can see this thread for more detail.

Hey @SpringerSpaniel - thanks for the ping! I’m looking into this for you, and will report back ASAP.

Hello again, @SpringerSpaniel

I chatted with my colleague Mark from R&D, and he said that he could design a mounting bracket. In other words… this is doable.

That being said, I do not have a timeline for you yet or an update on store availability, but please know that it’s on our list to explore. I’ll follow up again when progress has been made either way. Hope this helps.

The ASUS Hyper M.2 x16 PCIe card also comes in a PCIe 4 version, ASIN B084HMHGSP, at $87.
