I bought a complete system and want to run Proxmox. From my reading, I am assuming I want to start over and install Proxmox to the boot drive. Then, if I want to run Houston UI, I would install it in a VM. I cannot do it the other way around (or at least it would be convoluted and backwards). Is this a correct assumption before I begin my journey? Thanks

Hi @Goose, yes, you will need to reinstall the boot drive with Proxmox, but then you can simply install Houston UI directly on the Proxmox OS. Since Proxmox is based on Debian, our Ubuntu tools and packages will work with it.

You just need to enable our repository (repo.45drives.com) and then install Cockpit and our tools. This needs to be Proxmox 7 for it to work.

Here are the steps below:

Make sure the system is up to date:

apt update -y && apt upgrade -y

Next, add our repo. NOTE: this will fail when it looks for the lib-core package, which is fine; we are only using the script to add the repo.

curl -LO https://repo.45drives.com/setup && bash setup

Install Houston and the other tabs:

apt install cockpit

apt install cockpit-45drives-hardware cockpit-navigator cockpit-identities cockpit-zfs-manager
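The install steps above can be collected into one shell function so they are easy to repeat. This is only a sketch, not an official 45Drives installer: the run helper and the DRY_RUN switch are hypothetical additions that let you preview the commands, and the extra apt update after adding the repo is my assumption (it should be harmless if the setup script already refreshed the package index).

```shell
#!/usr/bin/env bash
# Sketch only: the Houston-on-Proxmox install steps from this thread.
# run/DRY_RUN are hypothetical helpers: with DRY_RUN=1 each command is
# printed instead of executed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

install_houston_on_proxmox() {
  # Make sure the system is up to date
  run apt update -y || return 1
  run apt upgrade -y || return 1

  # Add repo.45drives.com. The setup script is expected to fail on the
  # lib-core package; we only need it to add the repo, so ignore that failure.
  run curl -LO https://repo.45drives.com/setup || return 1
  run bash setup || echo "setup exited non-zero (expected lib-core failure)" >&2

  # Assumption: refresh the index so packages from the new repo are visible
  run apt update -y || return 1

  # Install Cockpit and the 45Drives tabs
  run apt install -y cockpit || return 1
  run apt install -y cockpit-45drives-hardware cockpit-navigator \
    cockpit-identities cockpit-zfs-manager
}
```

Preview with DRY_RUN=1 install_houston_on_proxmox, then run install_houston_on_proxmox as root on the Proxmox host once the printed commands look right.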

To hide ‘rpool’, go to Configure Cockpit ZFS Manager and uncheck Display root storage pool.

If the zpool has already been created in Proxmox, the drives shown by zpool status will not be aliased. To fix this, navigate to

Datacenter → Storage → click pool → Edit → Uncheck Enable → OK

Export the pool in Houston, then re-import it using drive aliasing:

zpool import -d /dev/disk/by-vdev "$poolname"

You can now navigate back to Proxmox and re-enable the ZFS pool.
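The same sequence can also be done entirely from the shell. A sketch, assuming the Proxmox storage entry is disabled with pvesm set --disable instead of through the GUI and the pool is exported with zpool export instead of from Houston; the pool and storage names in the usage line are hypothetical, and no running VM or container should be using the pool while it is exported.

```shell
#!/usr/bin/env bash
# Sketch: re-import an existing Proxmox ZFS pool so zpool status shows the
# /dev/disk/by-vdev aliases. With DRY_RUN=1 the commands are only printed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

realias_pool() {
  local pool=$1 storage=$2

  # Equivalent of Datacenter → Storage → Edit → uncheck Enable
  run pvesm set "$storage" --disable 1 || return 1

  # Export, then re-import using the alias directory
  run zpool export "$pool" || return 1
  run zpool import -d /dev/disk/by-vdev "$pool" || return 1

  # Re-enable the storage in Proxmox
  run pvesm set "$storage" --disable 0
}
```

For example, DRY_RUN=1 realias_pool tank tank-vm prints the four commands for a pool named tank registered as storage tank-vm; drop DRY_RUN to run them as root.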

Awesome. Thank you!!!

Rather than the ZFS import, could you also just pass the controllers directly through to the Houston VM on Proxmox? Or am I interpreting this wrong?

@Goose

I would like to offer some information that may save you from having to redeploy anything.

I have a 3-node Proxmox cluster. There are limits to what a ZFS pool managed by Proxmox can provide compared with an HL-15 hosting a ZFS pool and NFS for file sharing.

Your post did not say whether you will use only this one server or have other servers as well.

The CPU in the fully built system has 6 cores (12 threads for Proxmox to use). I do not know what your VM load will be, but this CPU uses less power and runs at a lower clock speed, so for my needs it would be underpowered. I was fortunate to find a few good servers from eBay seller UNIXSurplusCom; in fact, two of my Proxmox nodes were purchased from this seller.

To share what I experienced using Proxmox to manage the ZFS pool: I have a Proxmox node with 2 CPUs (12 cores or 24 threads in total), 768 GB RAM, and 8 SATA/SAS drives on an HBA. I enabled the Proxmox ZFS feature to manage the 8 drives for the VM guests. The other two Proxmox nodes cannot use this node’s ZFS storage. Also, the ZFS cache (ARC) uses RAM, which can compete with the VM guests’ RAM usage.
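If the ZFS cache competing with guest RAM is a concern, the ARC size can be capped. A sketch, assuming the zfs_arc_max module option in /etc/modprobe.d/zfs.conf as described in the Proxmox ZFS documentation; the function name is hypothetical and the 8 GiB in the usage line is only an example, not a recommendation for any particular hardware.

```shell
#!/usr/bin/env bash
# Sketch: print the modprobe line that caps the ZFS ARC at N GiB.
# zfs_arc_max is specified in bytes, so convert GiB → bytes.

arc_max_line() {
  local gib=$1
  case "$gib" in
    ''|*[!0-9]*)
      echo "usage: arc_max_line <whole number of GiB>" >&2
      return 1
      ;;
  esac
  local bytes=$(( gib * 1024 * 1024 * 1024 ))
  echo "options zfs zfs_arc_max=${bytes}"
}
```

For example, arc_max_line 8 > /etc/modprobe.d/zfs.conf (as root; this overwrites any existing file) sets an 8 GiB cap after a reboot. If the root filesystem is on ZFS, also run update-initramfs -u -k all. Writing the same byte value to /sys/module/zfs/parameters/zfs_arc_max applies it without a reboot.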

With storage kept separate from the Proxmox nodes, all three nodes can use the ZFS pool on the HL-15. In fact, that is how I run my own HL-15: it hosts all my ISOs, container templates, and Proxmox backups, and my VM hard disks live on it as well.
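Adding an HL-15 NFS export as shared Proxmox storage can be done from any node's shell. A sketch, assuming the HL-15 already exports a dataset over NFS; the storage ID, IP address, and export path defaults are hypothetical placeholders. Because Proxmox keeps storage definitions in the cluster-wide /etc/pve/storage.cfg, adding it once should make it visible to every node in the cluster.

```shell
#!/usr/bin/env bash
# Sketch: register an NFS export from the HL-15 as Proxmox storage for
# ISOs, container templates, backups, and VM disks.
# The defaults below are hypothetical; pass your own values.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

add_hl15_nfs() {
  local id=${1:-hl15-nfs}
  local server=${2:-192.168.1.50}
  local export_path=${3:-/tank/proxmox}

  # Content types: iso images, container templates, backups, VM disk images
  run pvesm add nfs "$id" \
    --server "$server" \
    --export "$export_path" \
    --content iso,vztmpl,backup,images
}
```

Preview with DRY_RUN=1 add_hl15_nfs hl15 10.0.0.20 /tank/pve, then run it as root without DRY_RUN.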

Hi @Glitch3dPenguin, yes, you could do that, but then the VM would be responsible for managing and sharing the data.
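Passing the controller through to a VM looks roughly like this. A sketch, assuming IOMMU is already enabled in the BIOS and kernel and that the Proxmox boot drive is not on the controller being passed through; the VM ID and PCI address in the usage line are hypothetical, so check lspci -nn for the real address. If the host has already imported a pool on those disks, export it on the host first, because the VM takes over the whole controller.

```shell
#!/usr/bin/env bash
# Sketch: pass a storage controller (HBA) through to a Proxmox VM so the
# guest (e.g. a Houston VM) owns the disks directly.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# List candidate storage controllers with their PCI addresses
list_storage_controllers() {
  lspci -nn | grep -Ei 'sas|sata|raid'
}

pass_hba_to_vm() {
  local vmid=$1 pci_addr=$2
  if [ -z "$vmid" ] || [ -z "$pci_addr" ]; then
    echo "usage: pass_hba_to_vm <vmid> <pci-address>" >&2
    return 1
  fi
  # Attach the PCI device to the VM as hostpci0
  run qm set "$vmid" --hostpci0 "$pci_addr"
}
```

For example, after finding the HBA with list_storage_controllers, DRY_RUN=1 pass_hba_to_vm 101 03:00.0 previews the qm command for VM 101.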

I believe the main question was whether you could have both Proxmox and Houston running on bare metal, which you can. This way no VM layer handles the storage; the host OS does it directly.

Either way works for running VMs and having Houston manage the data.

@pcHome, you could also run ZFS on all 3 Proxmox systems with a replication task that copies the data from one system to another, so that if one system fails, the VM and its data can be migrated and started on another host. (Proxmox also has built-in Ceph support, so you could build a Ceph/Proxmox cluster as well.)
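A replication task like this can also be created from the shell with pvesr. A sketch, assuming both nodes have a ZFS storage with the same storage ID (which Proxmox storage replication requires); the VM ID, target node name, and 15-minute default schedule are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create a Proxmox storage replication job for one guest.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

add_replication_job() {
  local vmid=$1 target=$2
  local schedule="${3:-*/15}"   # every 15 minutes unless overridden
  # Job IDs have the form <vmid>-<job number>; 0 is the first job for a guest
  run pvesr create-local-job "${vmid}-0" "$target" --schedule "$schedule"
}
```

For example, add_replication_job 100 pve2 replicates VM 100 to node pve2; pvesr status then shows when each job last ran.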

But yes, I agree it is usually better to keep your storage and your VM environment on separate systems instead of colocating them.

Thank you for sharing that. Would that storage technically be classified as Ceph, or can it still be called a ZFS pool? Or does it depend more on how the storage is defined: Datacenter → Storage → Add ZFS versus Node → Disks → ZFS → Create ZFS? Add ZFS accesses it by FQDN or IP address, whereas Create ZFS creates it locally on the node itself.

I would like to see whether I can use this, as I have about 40 to 50 TB across two ZFS pools on 2 of my Proxmox nodes.

FWIW, I installed Proxmox with Ceph and use a VM for the file shares. I’m planning on a 3-node Ceph cluster so that my file-sharing VM can float between hosts with HA. Instead of attaching RBD disks to my file server VM, I decided to create a CephFS client in Ceph manually and mount it in the file server VM, so I could access all of the available storage. To manage the file shares, I’m using Houston on top of Ubuntu 20.04.
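For the CephFS approach described above, creating the client and mounting it could look like this. A sketch using the Ceph kernel client's mount syntax; the filesystem name cephfs, the client name fileserver, the monitor address, and the paths are hypothetical placeholders, not the poster's actual setup.

```shell
#!/usr/bin/env bash
# Sketch: a dedicated CephFS client for a file server VM.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# On a Proxmox/Ceph node: create a client with read-write access to the
# whole filesystem (prints the new keyring)
cephfs_authorize() {
  local fs=${1:-cephfs} client=${2:-fileserver}
  run ceph fs authorize "$fs" "client.${client}" / rw
}

# In the file server VM: mount the filesystem root with the kernel client.
# mons is a monitor list such as 10.0.0.11:6789
mount_cephfs() {
  local mons=$1 client=${2:-fileserver} target=${3:-/mnt/cephfs}
  run mount -t ceph "${mons}:/" "$target" \
    -o "name=${client},secretfile=/etc/ceph/${client}.secret"
}
```

The secret file holds only the key, e.g. from ceph auth get-key client.fileserver on a Ceph node, copied to /etc/ceph/fileserver.secret in the VM. The VM also needs the ceph-common package for the mount.ceph helper that reads secretfile.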

Honestly, nowadays I would go hyperconverged: storage plus VMs on the same machine, all SSD. Cluster a few together and VMs could even run on one node while their storage stays on another (over iWARP/RDMA).

Maybe add a separate storage node with spinning disks for cold storage, backups, etc.

But yeah. Not really a homelab scenario for most.

So, will Houston UI work with Proxmox 8 soon? I need Proxmox 8 because drivers for Ryzen 7000-series processors do not exist in the kernel 5 that ships with Proxmox 7.

Houston does work on Proxmox 8. Proxmox is Debian-based, so we simply use the focal (Ubuntu 20.04) packages on Proxmox 8, which lets Houston work.

I just did this for a customer yesterday. Simply add our focal repo from repo.45drives.com to Proxmox; you should then be able to install Cockpit and all of our tools from it:

apt install cockpit

apt install cockpit-45drives-hardware cockpit-navigator cockpit-identities cockpit-zfs-manager

If you want to log in as root, you will need to remove root from /etc/cockpit/disallowed-users once Cockpit is installed.
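One way to do that from the shell, as a sketch: delete only the exact root line instead of editing the file by hand. The function name and the optional file argument (there so you can try it on a copy first) are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: allow a user (e.g. root) to log in to Cockpit by deleting the
# matching line from Cockpit's disallowed-users file.

allow_cockpit_login() {
  local user=$1 file=${2:-/etc/cockpit/disallowed-users}
  local tmp status
  tmp=$(mktemp) || return 1

  # Keep every line that is not exactly the user name
  grep -vxF -- "$user" "$file" > "$tmp"
  status=$?
  # grep exits 1 when no lines are left to print; 2 means a real error
  if [ "$status" -gt 1 ]; then
    rm -f "$tmp"
    return 1
  fi

  # Write back in place so the file keeps its owner and permissions
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Run allow_cockpit_login root as root; other entries in the file are left untouched.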

Yep, got it all running. Now if only I could figure out a way to sync users with Active Directory… (on the Cockpit side, for sharing and so on; I’ve already set up Proxmox with AD).

@Hutch-45Drives, what about getting the X11SPH-nCTPF motherboard added as a supported platform in the 45Drives Motherboard tab? [Support for the 45HomeLab HL15 · Issue #24 · 45Drives/cockpit-hardware · GitHub] Is there a timeline for that?

I’m still working with the team on getting dalias to work with Proxmox/Debian, so if you are saying it works out of the box, I’m not sure the repo I’m pulling from is the right one. I didn’t back up my /etc/vdev_id.conf file from the pre-installed system, as I didn’t know I needed to. Not sure that would have helped going from Rocky to Proxmox anyway?

What about a link to an ISO or disk image we can download to get back to the out-of-box experience, with Rocky and Houston UI set up, etc.?
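For reference, the /dev/disk/by-vdev names come from the OpenZFS vdev_id udev helper, which reads alias lines from /etc/vdev_id.conf. If dalias is not available yet, a manual fallback could be sketched as below. This is not a reimplementation of dalias; the slot names and device links in the usage example are hypothetical. Keeping a copy of /etc/vdev_id.conf before a reinstall also preserves whatever aliases it defined.

```shell
#!/usr/bin/env bash
# Sketch: print /etc/vdev_id.conf alias lines from NAME=DEVLINK pairs.
# After writing the file, 'udevadm trigger' (as root) recreates the
# /dev/disk/by-vdev/NAME links.

vdev_alias_lines() {
  local pair name link
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first '='
    link=${pair#*=}    # text after the first '='
    if [ -z "$name" ] || [ "$name" = "$pair" ]; then
      echo "skipping malformed entry: $pair" >&2
      continue
    fi
    echo "alias $name $link"
  done
}
```

For example, vdev_alias_lines "1-1=/dev/disk/by-path/pci-0000:01:00.0-sas-phy0-lun-0" > /etc/vdev_id.conf followed by udevadm trigger should create /dev/disk/by-vdev/1-1.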

Hi @Krushal, I believe the motherboard is supported in the testing version of the repo.

The system that worked “out of the box” was not an HL15; it was an enterprise server.

We haven’t yet decided whether to have a separate HL15 repo or put these packages in the enterprise repo, which is why they are not in the stable repo yet.

As for the factory image, I will talk to the team to see if we can get it uploaded somewhere for everyone to be able to pull it down.

You’re killing the profile picture game with the Santa hat placement.

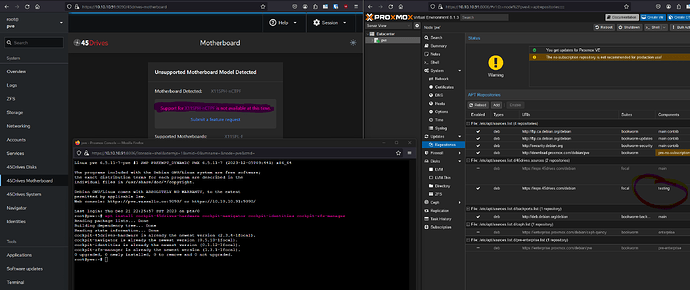

Hey @Hutch-45Drives, I disabled the ‘main’ repository, left only testing enabled, and ran the install for cockpit-45drives-hardware, etc. (nothing updated), but the 45Drives Motherboard tab still shows not supported.

Checking the GitHub branches (Master, Build, and Dev), I cannot find the X11SPH-nCTPF motherboard in the list, but I’m not sure I’m looking in the right place. I assume the testing repo pulls from one of those branches?

Hi @Krushal, I will need to check with the team and get back to you on that. The HL15 motherboard is a new board that we only use in these systems, so I’m not sure whether we have added support for it to the repo yet.

Hey @Hutch-45Drives, any update on this? This is the last issue I’m aware of in getting the 45Drives items working on Proxmox. (mhooper was great and got the dalias items figured out, at least manually for now, but I provided screenshots so that may be automated in the future.)

I could swear the 45Drives Motherboard tab worked on the Rocky Linux install I received before I wiped it and loaded Proxmox. Maybe I’m misremembering, but is there perhaps a special config file I just need to put on the server manually for now to get it to work?

I did not see the motherboard tab on my prebuilt HL-15. I even checked the backup I made of my NVMe (before making any changes to my original system).

There is an issue reported on their GitHub repo. I can share the link for you to monitor its resolution.

Hopefully this link can give you an alternate way to check for any updates.

Amending my original post at 2024-01-04T00:07:00Z:

Looking at the GitHub repo for cockpit-hardware, I found references to the X11SPH-nCTPF motherboard:

- On 4 October 2023, markdhooper (on GitHub) committed a change that added HL15 to one of the case statements. I am assuming he is the same person as @Hutch-45Drives.

- 45Drives Disk shows the correct image for the 15-port backplane.

- 45Drives System shows the correct image of the chassis. The System, PCI, CPU, Network, and IPMI details are shown correctly and match the output of the various Linux commands.

The “45Drives Motherboard” feature does not have specific images/assets to load for this board, which causes an error. I have not tried to debug it any further. The feature is similar to the Disks feature, which lets you click within the graphic to see more detail.

In my opinion, you are not really missing anything major, because “45Drives System” provides a full summary, and all the details it displays match what the equivalent Linux bash commands report.
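To compare what the System tab reports with the firmware data yourself, the board vendor and model can be read from sysfs without root. A sketch; the function name is hypothetical, and the exact board_name string an HL-15 reports is my assumption, so check the raw first line of output.

```shell
#!/usr/bin/env bash
# Sketch: print the motherboard vendor/model from DMI data in sysfs and
# say whether it looks like the HL15 board (X11SPH-nCTPF).
# The directory argument exists only so the function can be tested.

board_summary() {
  local dmi_dir=${1:-/sys/class/dmi/id}
  local vendor name
  vendor=$(cat "$dmi_dir/board_vendor" 2>/dev/null || echo unknown)
  name=$(cat "$dmi_dir/board_name" 2>/dev/null || echo unknown)

  echo "$vendor $name"
  case "$name" in
    X11SPH-nCTPF*) echo "X11SPH-nCTPF detected (HL15 motherboard)" ;;
    *) echo "not the HL15 motherboard" ;;
  esac
}
```

Running board_summary on the HL-15 prints the vendor and model on the first line; dmidecode -s baseboard-product-name (as root) should report the same model.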

Hope this gives you more confidence in the details Cockpit shows for the HL-15.