Hey everyone!

I’m assuming most of you are coming straight from my newly released video “45HomeLab - Doubling the Transfer Speeds of the HL15”. If you haven’t seen it yet, head here to watch it so you have the full context for this thread!

Alright, so let’s get to it. In my video I threw down a challenge for all you 45 homelabbers out there! I had some fun getting SMB multichannel configured on the HL15 to make use of that second 10Gbit connection on the server, and was able to hit some really great speeds.

I spent about 20 minutes tweaking a few parameters to get a really great speed, but I deliberately left plenty of room for further tuning, so there is a lot more performance to squeeze out of it...

So, with that in mind, I am challenging y’all to beat my performance with your HL15. The winner will get some really great 45Drives swag shipped out to them!

There are specific criteria you must meet to be eligible for the official prizes, but even if you don’t meet all of them, don’t worry: we still encourage you to take part! And if you don’t have everything needed for this challenge, don’t fret. There will be lots of other opportunities to take part and win some cool stuff!

Here are the rules and the parameters you must meet to be eligible for the winner’s award and 45Drives swag:

- The server used in the challenge must be an HL15 chassis.
  - The electronics are your choice: all CPUs, RAM, and motherboards are accepted.
- The server must be running 10Gb/s networking.
  - The challenge comes from using multiple 10Gb connections to deliver more than the 10Gb/s limit of a single interface.
  - SMB multichannel OR bonding are both acceptable ways to achieve your numbers.
- The storage used for the tests must be 15 HDDs or fewer.
  - No dual-actuator HDDs (Mach.2, etc.).
  - No NVMe or SSD used as a cache of any kind (bcache, OpenCAS, LVM cache, etc.).
- ZFS must be used for the file system.
  - No helper VDEVs such as SLOG, L2ARC, or special VDEVs.
  - All VDEV arrangements are valid, including simple (non-redundant) ones. (In the video, we used RAIDZ2.) Bonus points if you can match or beat my performance with some form of RAID protection.
- The benchmarks must be run from a client accessing an SMB share served from the ZFS pool of 15 HDDs or fewer.
  - Test 1: CrystalDiskMark SEQ1M Q32T1 (queue depth 32, single thread, 1M block size) with a 1GB file size (instructions below). Record the performance with screenshots.
  - Test 2: Create a 10GB file (instructions below), then:
    - Copy the 10GB file FROM your client’s local drive TO the HL15 ZFS SMB share and record the performance with screenshots.
    - Copy the 10GB file TO your client’s local drive FROM the ZFS SMB share and record the performance with screenshots.
- Include all tuning parameters used to achieve the result.
  - For bonus points: include your numbers both before and after tuning.
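
For anyone setting up from scratch, here is a rough sketch of the two server-side pieces these rules revolve around: a ZFS pool that stays within the limits above, and the Samba settings for SMB multichannel. It is not necessarily the exact configuration from the video. It assumes Samba is the SMB server, and the pool name `tank`, the disk names, and the two IP addresses are hypothetical placeholders. Nothing is executed: the helpers only print a dry-run `zpool create -n` command and an `smb.conf` fragment for you to review.

```shell
#!/usr/bin/env bash
# Sketch only: prints a command and a config fragment for review, runs nothing.
# Pool name, disk names and IP addresses are hypothetical placeholders.
set -euo pipefail

# Print a dry-run `zpool create` for a single RAIDZ2 vdev that respects the
# challenge limits: 3 to 15 disks, no log/cache/special/dedup helper vdevs.
build_pool_cmd() {
  local pool="$1"; shift
  local -a disks=("$@")
  local d
  if (( ${#disks[@]} < 3 || ${#disks[@]} > 15 )); then
    echo "error: this RAIDZ2 sketch needs 3 to 15 disks, got ${#disks[@]}" >&2
    return 1
  fi
  for d in "${disks[@]}"; do
    case "$d" in
      log|cache|special|dedup)
        echo "error: helper vdev '$d' is not allowed" >&2
        return 1 ;;
    esac
  done
  # -n = dry run: zpool prints the proposed layout without creating the pool.
  # ashift=12 (4K sectors) is an assumption that suits most modern HDDs.
  echo "zpool create -n -o ashift=12 $pool raidz2 ${disks[*]}"
}

# Print an smb.conf [global] fragment enabling SMB multichannel over two
# 10Gb interfaces. The speed= value is given in bits per second.
smb_multichannel_fragment() {
  local ip1="$1" ip2="$2" speed=10000000000
  cat <<EOF
[global]
    server multi channel support = yes
    interfaces = "${ip1};speed=${speed},capability=RSS" "${ip2};speed=${speed},capability=RSS"
EOF
}

# Example with placeholder values:
#   build_pool_cmd tank /dev/disk/by-id/ata-DISK{01..15}
#   smb_multichannel_fragment 192.168.10.10 192.168.20.10
```

Once the printed layout looks right, drop `-n` to actually create the pool. Merge the fragment into `/etc/samba/smb.conf`, check it with `testparm -s`, and reload with `smbcontrol all reload-config` (recent Samba releases may already have multichannel enabled by default). On the Windows client, the PowerShell cmdlet `Get-SmbMultichannelConnection` shows whether more than one connection is actually in use.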

How to add a submission to the challenge:

Once you have something ready to submit, take screenshots of all tests in progress showing the performance numbers.

We have a bash script created just for this challenge, which you can find here: https://scripts.45homelab.com/perf-challenge.sh

Note: If your setup does not meet all of the rules listed above, the script may fail on some steps, but it should still complete and create an output file.

Follow these instructions to pull it down:

Open up your HL15’s terminal and make sure you are logged in as root.

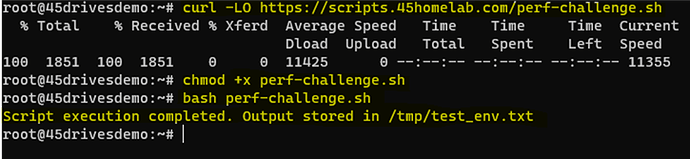

From the terminal, run: `curl -LO https://scripts.45homelab.com/perf-challenge.sh`

Next, make it executable: `chmod +x perf-challenge.sh`

Finally, run the script: `bash perf-challenge.sh`

It will write its output to `/tmp/test_env.txt`.

Gather up your screenshots and the test_env.txt file and we will follow up on this post with a place for you to upload them.

CrystalDiskMark instructions:

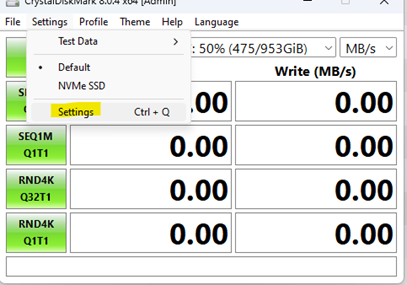

You can download a clean copy of CrystalDiskMark from guru3d here: Crystal DiskMark 8.0.4 Download

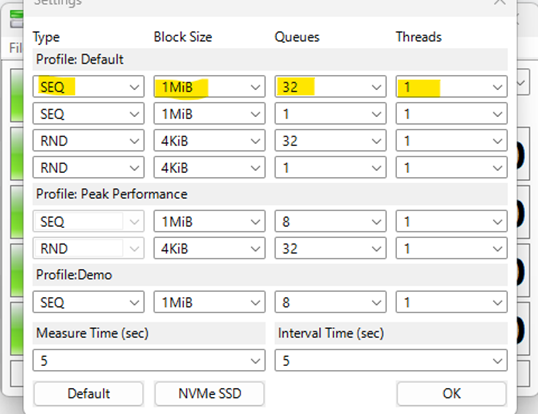

Once downloaded and installed, open CrystalDiskmark64.exe. By default, the first test should already be very close to what we want: it is most likely set to SEQ1M Q8T1, and we want SEQ1M Q32T1. To change it, open the Settings menu and click Settings in the dropdown.

Next, change the queue count for test 1 to 32 and make sure your settings match the image below:

Click OK.

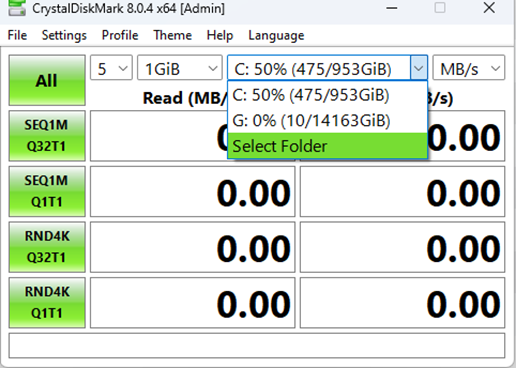

From here, you must select your network-attached SMB drive as the target. By default, CrystalDiskMark points at the C:\ drive, so click the drive drop-down menu and click “Select Folder”.

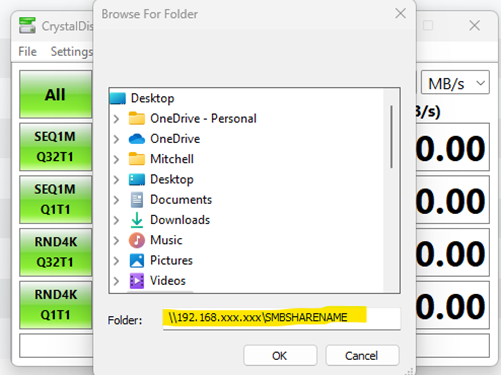

Choose the full path to the SMB share.

You are now ready to run the first test. There is no need to run “ALL” for the purposes of this challenge. We are simply looking for the results from the first SEQ1M Q32T1 test.

10GiB File creation on Windows:

NOTE: To reach GB/s-class speeds and show the full performance of your HL15 array, you will need a local NVMe drive in your workstation. If you do not have one, you can instead create a temporary RAM disk using this tool: ImDisk Toolkit download | SourceForge.net. Make the RAM disk large enough to hold the 10GiB test file. It acts as a great temporary stand-in drive: copy the file from it to the HL15 SMB share, and then back from the share to the RAM disk.

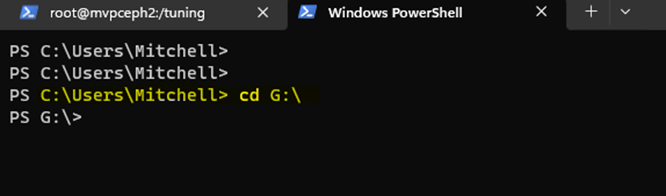

Open Windows Terminal (or CMD if Windows Terminal is not installed).

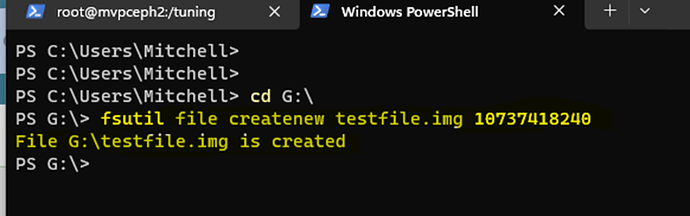

Use `cd` to move into the local directory where you want to create the new file. In my example, I’m using the G:\ drive.

Next, we create our 10GiB file (10 × 1024³ = 10737418240 bytes):

`fsutil file createnew testfile.img 10737418240`

This will instantly create the file in your current directory, and we can now use it for our transfers to and from the HL15 SMB share.
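
If your client runs Linux or macOS instead of Windows, here is a rough equivalent of the `fsutil` step, plus a helper that turns a timed copy into MiB/s. This is a sketch under assumptions, not part of the official instructions: the paths and mount points in the comments are hypothetical, and it fills the file from `/dev/urandom` rather than with zeros. That is a deliberate difference: `fsutil file createnew` writes an all-zero file, and if compression is enabled on your ZFS dataset those zeros take up almost no space on disk, so random data gives a more conservative number.

```shell
#!/usr/bin/env bash
# Sketch for Linux/macOS clients: create a random-data test file and
# convert a measured copy time into MiB/s. Paths below are hypothetical.
set -euo pipefail

TEST_BYTES=10737418240   # 10 GiB = 10 * 1024^3, same size as the fsutil step

# Write exactly $2 bytes of random data to the file at $1.
make_testfile() {
  local path="$1" bytes="$2"
  head -c "$bytes" /dev/urandom > "$path"
}

# Convert bytes moved and elapsed seconds into MiB/s (1 MiB = 1048576 bytes).
# awk does the floating-point division that bash arithmetic cannot.
throughput_mib_s() {
  local bytes="$1" seconds="$2"
  awk -v b="$bytes" -v s="$seconds" 'BEGIN { printf "%.1f\n", b / 1048576 / s }'
}

# Example (hypothetical paths; on Linux a tmpfs RAM disk stands in for ImDisk):
#   sudo mount -t tmpfs -o size=11G tmpfs /mnt/ramdisk
#   make_testfile /mnt/ramdisk/testfile.img "$TEST_BYTES"
#   time sh -c 'cp /mnt/ramdisk/testfile.img /mnt/hl15/ && sync'
#   throughput_mib_s "$TEST_BYTES" <real seconds reported by time>
```

For scale: 10 Gbit/s is 1.25 GB/s, or roughly 1192 MiB/s, before SMB and TCP overhead. A 10GiB copy that finishes in 8 seconds works out to `throughput_mib_s 10737418240 8` = 1280.0 MiB/s, which could not have fit through a single 10Gb link.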

So, there we have it! The gauntlet has been thrown down, and I can’t wait to see what everyone comes up with. Good luck, everyone! I encourage everyone taking part to share the parameters and tunings you are trying out. I will definitely be monitoring the thread and helping out with questions!