It was time to replace an aging Dell T430 server that was slowly dying, so I decided to build my own rather than buy another branded server or NAS, or just cobble old parts together and call it a server. My past experience buying servers has been fine, but since I’ve been building my own custom gaming PCs since the 1990s (!), I figured it was time to build a proper server from the ground up.

Some requirements I had for the new server: a powerful CPU, ECC memory, lots of drive bays, PCIe bifurcation, and a rack-mountable case. The only real choice of case for this build was a 45HomeLab chassis, and I went with the HL15 so I could pack it with drives.

The old system has been happily running Unraid for years, but the new one runs TrueNAS, hence the ECC RAM.

It’s a pretty powerful system because, in addition to becoming my Docker server as I migrate off the old servers, it will also host a few high-powered virtual machines.

The hardware I settled on:

HL15 chassis (obviously!)

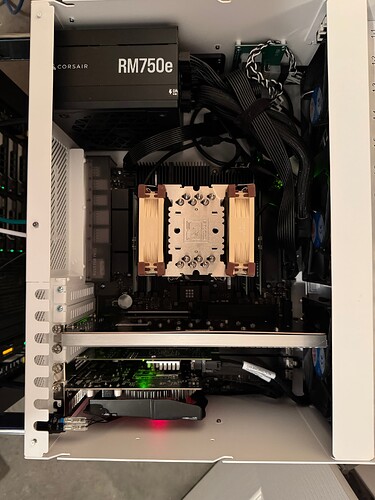

Intel Xeon w5-2455X processor

Noctua CPU cooler

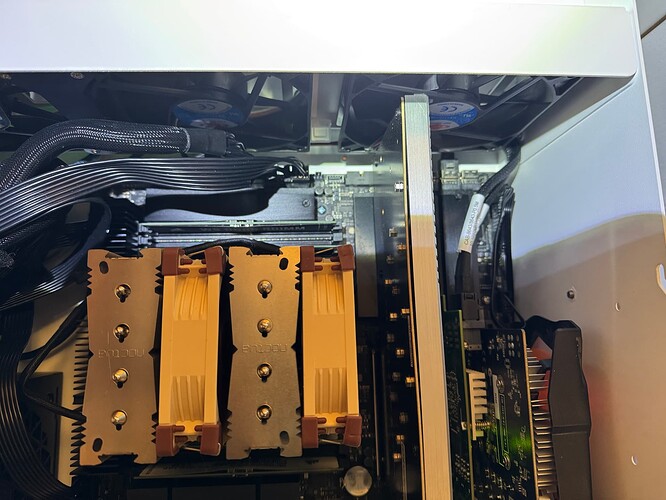

128GB Corsair DDR5 ECC RAM at 5600 MT/s

Asus Pro WS W790-ACE motherboard (its SATA and MiniSAS ports drive eight of the backplane bays), hosting 2x 256GB NVMe drives mirrored for the OS.

LSI SAS3008 HBA, connected to two of the backplane cables (four drives each).

Asus Hyper M.2 PCIe card housing 2x 512GB and 2x 2TB NVMe drives, used for caching and for a fast ZFS mirror for local VMs and Proxmox VMs.

Corsair RM750e power supply.

GTX 750 GPU for video output.

Funnily enough, I was able to fit an old RTX 2080 Ti I had kicking around into the chassis, but because it’s such a large card it covered too many of the PCIe slots, and I needed those.

The hard drives are a mix of old and new from the old servers and are arranged in two ZFS pools: one pool with two raidz1 vdevs of four 8TB IronWolf drives each, and a second pool with one raidz1 vdev of four 16TB IronWolf drives. Total capacity is just shy of 80TB.
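As a rough sanity check on that capacity figure, here is a small Python sketch of the raidz1 arithmetic. It assumes each raidz1 vdev gives up one drive’s worth of space to parity and that the drive sizes are decimal terabytes as sold. It ignores ZFS metadata, slop space and raidz padding, so the number TrueNAS reports will be lower than this estimate; the difference between decimal TB and binary TiB accounts for a good part of the gap.

```python
# Rough usable-capacity estimate for the pool layout described above.
# Assumptions: drive sizes are vendor decimal terabytes (10**12 bytes),
# and each raidz1 vdev loses one drive's worth of space to parity.
# ZFS metadata, slop space and raidz padding are NOT modelled, so the
# real reported figure will be somewhat lower.

TB = 10**12   # decimal terabyte, as printed on the drive label
TIB = 2**40   # binary tebibyte, closer to what most tools display


def raidz1_usable_bytes(drives: int, drive_tb: int) -> int:
    """Data capacity of one raidz1 vdev: one drive's worth goes to parity."""
    if drives < 2:
        raise ValueError("need at least one data drive beyond the parity drive")
    return (drives - 1) * drive_tb * TB


# Pool 1: two raidz1 vdevs, each with four 8TB drives (ZFS stripes across vdevs).
pool1 = 2 * raidz1_usable_bytes(4, 8)
# Pool 2: one raidz1 vdev with four 16TB drives.
pool2 = raidz1_usable_bytes(4, 16)

total = pool1 + pool2
print(f"pool1: {pool1 / TB:.0f} TB, pool2: {pool2 / TB:.0f} TB")
print(f"total: {total / TB:.0f} TB = {total / TIB:.1f} TiB before ZFS overhead")
```

Run as-is, this prints 48 TB for each pool and 96 TB (about 87.3 TiB) in total before overhead. Changing the drive counts and sizes in the two pool lines is all it takes to estimate a different layout.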

In addition, two SATA SSDs form an application pool for Docker containers and also host some user home folders.

Note that the motherboard is slightly larger than E-ATX, but it fits fine. I did have to remove the fan assembly to get everything installed, but that wasn’t a problem. No cables are crammed or bent in a way that could cause damage.

Yeah, it’s probably overkill, but I expect many years out of this hardware, and it’s going to get pushed to the limit.

The keen-eyed will notice that the fans on the CPU cooler don’t blow front to back. I don’t know whether ASUS or Noctua is to blame, but the socket is oriented up/down, and that’s how it all fits together. I was going to replace the cooler, but after a couple of weeks of heavy CPU use, temperatures have never risen above 45°C, so I’m not going to worry about it.

Now the data transfer process is underway as I shift everything over to the new server.

It’s been a fun build, and I’ve had to learn way too much about cabling systems with large numbers of disks, hahaha.