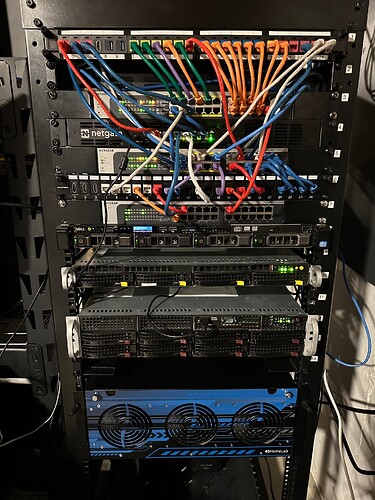

This is a quick post to show my HL-15 installed within my rack.

Just as Jeff Geerling has a shirt stating that he cosplays as a sysadmin, I am one who does not take the time to manage cables.

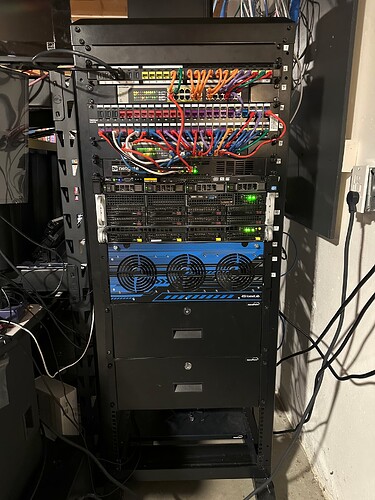

After the ZFS data was moved to the HL-15, I updated the photo on 2023-12-01T00:15:00Z. I replaced many of the Ethernet cables with shorter (6") cables.

This rack was something I started during COVID (March 2020). Today the rack is almost full.

This coming Thanksgiving holiday, I am hoping to “tame” the cabling.

Currently the rack hosts:

- Keystone jack with 24 ports supporting Ethernet, HDMI, and USB ports

- Netgear unmanaged 24-port switch

- Netgate SG-4860 - the 1U rack-mount model. The memory on this model is 8 GB.

- Netgear unmanaged 24-port switch

- Keystone jack with 24 Ethernet ports

- Netgear unmanaged 24-port switch

- Dell R420 with 384 GB RAM and 4 drives on a Dell RAID card. 2 sockets yielding 16 total cores (32 threads for virtualization)

- Supermicro X9DRW-7/iTPF with 1 TB RAM and 4 drives running ZFS RAIDZ2. The chassis is a Supermicro (I don’t have the model number). 2 sockets yielding 16 cores (32 threads for virtualization)

- Supermicro X9DRi-LN4+/X9DR3-LN4+ with 786 GB RAM and 8 drives running ZFS RAIDZ3. The chassis is a Supermicro (I don’t have the model number). 2 sockets yielding 16 cores (32 threads for virtualization)

- Chenbro unit running TrueNAS with 10 ZFS drives (with 2 additional drives for a vdev) and a separate SSD to boot from. This unit does not have a lot of memory or a powerful CPU, as it is just a storage unit.

- HL-15 - I picked the stock model (16 GB RAM with SFP+ ports). I still have to install the 4-slot NVMe carrier card.

On the other side of the rack I have two MikroTik switches creating my 10GbE backbone for the servers. Those models are CRS309-1G-8S+IN and CRS305-1G-4S+IN. As you read this post, you might notice a number of switches/routers. I use the unmanaged switches to separate the network traffic.

Functionally, there is a 3-node Proxmox cluster composed of the Dell R420 and the 2 Supermicro units. The cluster is connected to a TrueNAS server as a storage target (for ISOs, backups, and running VMs). The cluster provides 88 CPU threads, 2 TB of RAM, and 68.58 TiB of storage.
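As a rough sanity check on the RAM figure, here is a minimal sketch that sums the per-node memory from the list above. It assumes 1 TB means 1024 GB, and the dictionary name and layout are only for illustration.

```python
# Per-node RAM in GB, as listed in this post.
# Assumption: the "1 TB" node is counted as 1024 GB.
node_ram_gb = {
    "Dell R420": 384,
    "Supermicro X9DRW-7/iTPF": 1024,
    "Supermicro X9DRi-LN4+/X9DR3-LN4+": 786,
}

# Add up the three Proxmox nodes and convert to TB (1024 GB per TB).
total_gb = sum(node_ram_gb.values())
total_tb = total_gb / 1024

print(f"Cluster RAM: {total_gb} GB (about {total_tb:.2f} TB)")
```

The total lands a little above 2 TB, which is in line with the rounded number quoted for the cluster.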

Not seen in the photo are the couple dozen Raspberry Pi units (3B, 4, and 400), the two PiKVM devices connected to them, and a Home Assistant Yellow.

There are items I have not yet decided whether to add to the rack or recycle. Compared to the existing rack units, these draw more power without yielding much computing power:

- Apple Xserve - the 1U unit, which supports 96 GB RAM and 3 SAS (or SATA) drives.

- Dell CS24-SC - these 1U units were mostly deployed as Facebook servers in the early 2000s. I have two units, each supporting 2 CPUs (4 cores) and up to 48 GB RAM.

- Netgate XG-7100 - the 1U unit needs to have its power supply replaced. It supports 24 GB RAM and an early version of NVMe. It was the model that required extra configuration to create discrete network devices.

- Dell mid-tower unit circa 2008 - the unit looks like a desktop and supports 32 GB RAM. The Intel CPU has either 2 or 4 cores.

2023-11-16T16:31:00Z - I received a generic set of Intel SFP+ cables. The stock cables from FS.com are not that expensive (I paid $11 USD). I do not mind the shipping costs.

This stock cable (with Intel transmitter ends) works with MikroTik switches too. I generally pay extra for cables with transmitters specific to the network card or switch/router chipset (and a longer length).

2023-11-30T16:56:00Z - Updated my HL-15 with the 12 drives from my TrueNAS server.

Updated the photo after I purchased 6" Ethernet cables and redid the cabling with them.