Specter

February 27, 2024, 10:19pm

1

Yes it’s an incredibly dumb question.

It was late when I got Houston up and running - grabbing the wrong OS install media can waste a lot of time. Though I could swear that I set my pool to use the 3x 18TB drives in RaidZ1, I’m looking at the numbers next to a ZFS pool calculator and can’t tell if it created the pool in RaidZ1.

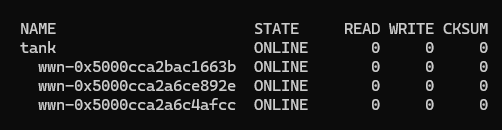

“zpool list -v tank” & “zpool history -i tank” did not give me any answers.

The ZFS capacity calculator (ZFS Capacity Calculator - WintelGuy.com) says that I should have…

49TB of storage capacity,

54TB raw,

31.6TB usable, and

28.4TB usable after the 10% refreservation and slop space allocation.
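
For anyone sanity-checking figures like these, here is a rough sketch of the arithmetic in Python. It only converts decimal TB to binary TiB and subtracts one drive of parity for RaidZ1; it ignores padding, metadata, refreservation and slop space, so it gives an upper bound rather than what the calculator reports. The function names are made up for illustration.

```python
# Rough capacity arithmetic for 3 x 18 TB drives.
# Drive vendors use decimal TB; ZFS tools mostly report binary TiB.
TB = 10**12
TIB = 2**40


def raw_tib(n_drives: int, drive_tb: float) -> float:
    """Raw capacity of all drives, in TiB (also the size of a plain stripe)."""
    return n_drives * drive_tb * TB / TIB


def raidz1_data_tib(n_drives: int, drive_tb: float) -> float:
    """Upper bound on RaidZ1 data capacity: one drive's worth goes to parity.

    Ignores padding, metadata, refreservation and slop space.
    """
    return (n_drives - 1) * drive_tb * TB / TIB


print(f"raw / striped:      {raw_tib(3, 18):.1f} TiB")
print(f"raidz1 upper bound: {raidz1_data_tib(3, 18):.1f} TiB")
```

With 3x 18TB drives this gives roughly 49.1 TiB raw and 32.7 TiB as the RaidZ1 upper bound, which lines up with the calculator's 49 and 31.6 once overheads are taken off. A pool showing close to the full 49 TiB as usable space would point to a plain stripe. Keep in mind that `zpool list` reports raw size including parity for RaidZ vdevs, so the usable figure is the one to compare.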

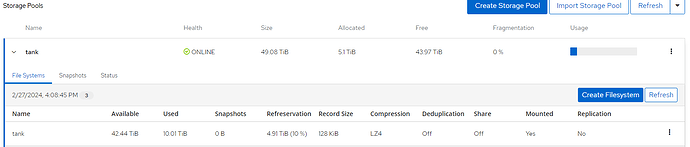

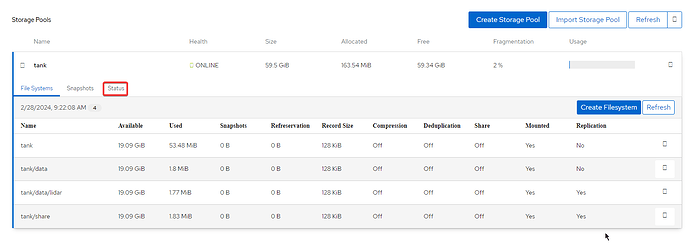

Houston shows that pool “tank” has the following values

Is this RaidZ1 or did I misclick?

Thanks in advance for helping a silly person who really does not want to re-transfer 10TB of data

Hi @Specter ,

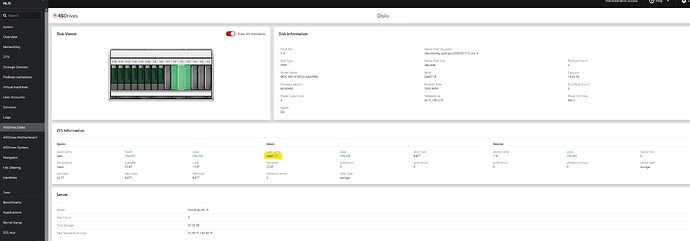

It appears you might not have a full build, so not sure if the 45drives disks module is available to you.

Specter

February 27, 2024, 11:11pm

3

That is correct - hacking that plugin to work with my HBA (9300 16i) is at the top of the stack… but I don’t know where to begin yet.

Specter continues to regret not getting the full build.

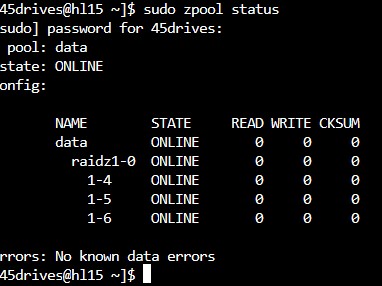

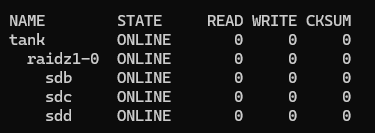

Here’s the zpool metadata…

capacity operations bandwidth ---- errors ----

description used avail read write read write read write cksum

Not a problem. Just drop into a command line and run “zpool status”.
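
If it helps to see what to look for in that output, here is a small Python sketch that scans `zpool status` text for a raidz or mirror vdev line. The sample output and device names below are made up for illustration, not taken from this pool. In a striped pool the disks sit directly under the pool name with no `raidz1-0` style line above them.

```python
import re

# Hypothetical `zpool status tank` output; device names are invented.
SAMPLE_STATUS = """\
  pool: tank
 state: ONLINE
config:

        NAME        STATE     READ WRITE CKSUM
        tank        ONLINE       0     0     0
          raidz1-0  ONLINE       0     0     0
            sda     ONLINE       0     0     0
            sdb     ONLINE       0     0     0
            sdc     ONLINE       0     0     0

errors: No known data errors
"""

# Matches indented vdev names such as raidz1-0, raidz2-1 or mirror-0.
VDEV_LINE = re.compile(r"^\s+((?:raidz[123]?|mirror)-\d+)\s", re.MULTILINE)


def redundant_vdevs(status_text: str) -> list[str]:
    """Return the raidz/mirror vdev names found in `zpool status` text."""
    return VDEV_LINE.findall(status_text)


vdevs = redundant_vdevs(SAMPLE_STATUS)
print(vdevs if vdevs else "no raidz/mirror vdevs found (plain stripe?)")
```

To run it against a real pool, the text could come from `subprocess.run(["zpool", "status", "tank"], capture_output=True, text=True).stdout` instead of the sample string.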


You can also run “zpool history”. That will show you what options were used when you created the pool.
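
As a rough illustration of reading that history, the Python sketch below looks for the `zpool create` line and checks whether a redundancy keyword follows the pool name. The sample history lines, timestamps, options and device names are all hypothetical; the exact command Houston issues may look different.

```python
# Keywords that `zpool create` accepts for redundant vdev types.
VDEV_KEYWORDS = {"mirror", "raidz", "raidz1", "raidz2", "raidz3"}

# Hypothetical `zpool history tank` output for two differently built pools.
RAIDZ_HISTORY = """\
History for 'tank':
2024-02-27.21:40:12 zpool create -o ashift=12 tank raidz1 sda sdb sdc
"""
STRIPE_HISTORY = """\
History for 'tank':
2024-02-27.21:40:12 zpool create -o ashift=12 tank sda sdb sdc
"""


def create_layout(history_text: str, pool: str) -> str:
    """Report the vdev keyword used in the pool's `zpool create` command."""
    for line in history_text.splitlines():
        tokens = line.split()
        if "zpool" in tokens and "create" in tokens and pool in tokens:
            found = [t for t in tokens if t in VDEV_KEYWORDS]
            return found[0] if found else "stripe"
    return "no create command found"


print(create_layout(RAIDZ_HISTORY, "tank"))
print(create_layout(STRIPE_HISTORY, "tank"))
```

If the create line lists the disks with no `raidz1` (or similar) keyword in front of them, the pool was built as a plain stripe.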

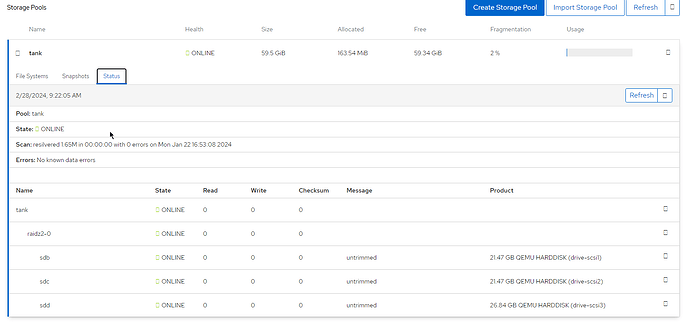

@Specter , You can see your pool status by going to the ZFS tab and pressing the status tab

Looking at the image you uploaded below, it looks like you simply have 3 drives in a pool with no RAID, so they would simply be striped together. I highly recommend deleting and recreating the pool as RAIDZ1 for at least 1 drive of parity.


Specter

February 28, 2024, 9:07pm

8

Was able to confirm that it was wrong.

Changed a few settings, but I rebuilt it and
