Many thanks for the advice and for taking the time to share it. Unfortunately, adding a second box isn’t possible right now.

This is not a sponsored project; I’m building it with my own funds for both personal and work use. I tend to watch hardware and storage prices for a long time and only buy once I find a deal that’s overwhelmingly in my favor. Over the years, I’ve collected several high-end SSDs and Optane drives at very good prices.

Server Purposes:

- Plex.

- Storing personal photos, documents, etc.

- Acting as a Git server.

- Serving as a backup for my workstation (I use some 15 TB Kioxia drives in RAID 1 for work).

- Backing up phone photos and data.

- Providing shared storage for my workstation, laptops, TV, etc.

- Storing test traces from RV-Cores simulations.

- Storing layouts and synthesized netlists.

- Housing additional data generated from my work.

I’m calling this build “paranoid” because a second backup server isn’t something I can manage right now. Even this one is more than I’d like, since it will make noise in my room, and I really need a quiet environment to focus.

Therefore, reliability is the most important feature: I need to make sure I don’t lose data. I’m willing to sacrifice some storage capacity by using three RAIDZ3 vdevs, and I’d rather buy larger drives to make up the lost capacity than compromise on reliability.

I also plan to use link aggregation (LAG), since no single client needs more than 10 GbE. As for overall storage, I don’t need a huge amount; around 72 TB would be fine for a few years. I currently generate roughly 1–3 TB of data every 2–3 months, so 12 TB drives in three RAIDZ3 vdevs should cover my needs for quite some time.
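To sanity-check the 72 TB figure, here is a rough sketch of the capacity and runway arithmetic. The vdev widths are hypothetical (the exact layout isn’t fixed yet), and the model subtracts only parity drives, ignoring RAIDZ allocation padding, metadata, ZFS slop space, free-space headroom, and data already on the pool.

```python
# Rough capacity/runway sketch for a pool built from identical RAIDZ vdevs.
# Simplifying assumptions: only parity drives are subtracted; RAIDZ allocation
# padding, metadata, ZFS slop space, free-space headroom, and data already on
# the pool are all ignored. Capacities are decimal TB.


def raidz_usable_tb(vdevs: int, width: int, parity: int, drive_tb: float) -> float:
    """Approximate usable TB: data drives per vdev x number of vdevs x drive size."""
    if width <= parity:
        raise ValueError("vdev width must be larger than the parity level")
    return vdevs * (width - parity) * drive_tb


def runway_years(capacity_tb: float, tb_per_period: float, months_per_period: float) -> float:
    """Years until capacity_tb fills, growing by tb_per_period every months_per_period."""
    tb_per_year = tb_per_period * 12 / months_per_period
    return capacity_tb / tb_per_year


if __name__ == "__main__":
    # Three RAIDZ3 vdevs of 12 TB drives; the vdev widths are hypothetical.
    for width in range(5, 9):
        print(f"{width}-wide: {raidz_usable_tb(3, width, 3, 12):.0f} TB usable")

    capacity = raidz_usable_tb(3, 5, 3, 12)  # 72 TB in this simplified model
    print(f"fast growth (3 TB / 2 months): {runway_years(capacity, 3, 2):.1f} years")
    print(f"slow growth (1 TB / 3 months): {runway_years(capacity, 1, 3):.1f} years")
```

In this simplified model, 72 TB corresponds to three 5-wide RAIDZ3 vdevs (15 drives), which would last roughly 4 years at 3 TB every 2 months and about 18 years at 1 TB every 3 months; real usable space will be somewhat lower once the ignored overheads are counted.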

I don’t need the 4x 10 GbE LAG to run at full capacity all the time. The workflow consists of large bursts of data, ranging from a few tens of gigabytes up to around 200 GB (though almost never that large), after which activity subsides for several minutes.
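A back-of-the-envelope sketch of how long those bursts take on the wire. Because LAG typically hashes each connection onto a single member link, one client tops out at one 10 GbE link, while several clients at once can push toward the aggregate. The `efficiency` factor is an assumed placeholder for protocol overhead; 1.0 means ideal line rate, which real SMB/NFS transfers won’t reach.

```python
# Back-of-the-envelope burst timing over 10 GbE links (decimal GB).
# Assumption: `efficiency` is a placeholder for protocol overhead (SMB/NFS,
# TCP/IP); 1.0 means ideal line rate, which real transfers will not reach.


def link_gbytes_per_s(link_gbit: float, efficiency: float = 1.0) -> float:
    """Payload rate in GB/s for a link of link_gbit Gb/s."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return link_gbit / 8 * efficiency


def burst_seconds(size_gb: float, link_gbit: float, efficiency: float = 1.0) -> float:
    """Seconds for one client to send size_gb over a single link."""
    return size_gb / link_gbytes_per_s(link_gbit, efficiency)


if __name__ == "__main__":
    for size in (20, 50, 200):
        print(f"{size} GB burst at 10 GbE: {burst_seconds(size, 10):.0f} s (ideal)")
    # With LAG, each client still rides one 10 GbE member link, but four
    # clients writing at once could ask the pool to absorb up to this much:
    print(f"4 x 10 GbE aggregate: {4 * link_gbytes_per_s(10):.2f} GB/s")
```

Even in the ideal case, a 200 GB burst keeps one 10 GbE link busy for about 160 seconds, so the pool has to sustain roughly 1.25 GB/s per active client for that long, not just for a few seconds.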

Most of the time, however, the workload consists of many small, random files.

Main Concern: Accelerating the Pool

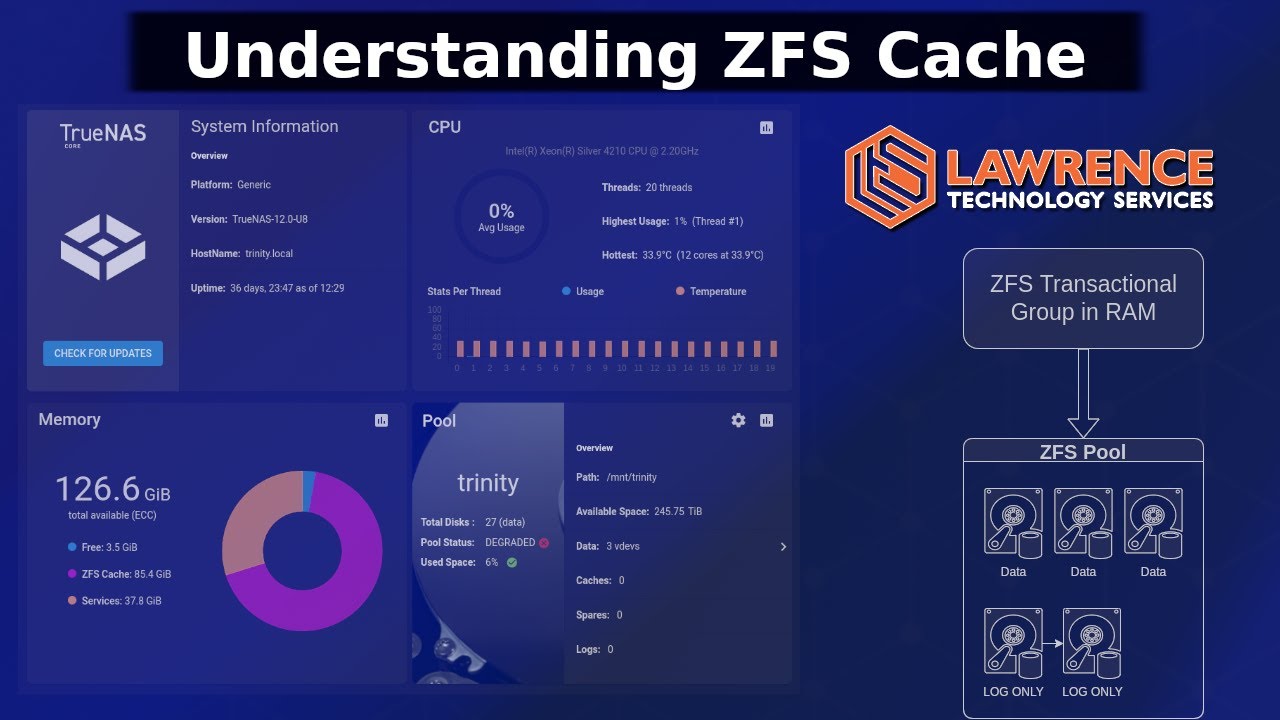

L2ARC (Read Cache): I’ve watched multiple videos suggesting it’s better to add more RAM than to rely on an L2ARC. I’d love to see some test data on this, so if anyone can share some, that would be very helpful. My understanding is that data stays in the ARC (which is fast, since it lives in RAM) until the ARC is full, at which point older data is evicted to make room for newly read data.
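One concrete reason behind the “add RAM first” advice is that an L2ARC is not free in terms of RAM: for every block cached on the L2ARC device, ZFS keeps a small header in the in-RAM ARC. The sketch below estimates that overhead. The 80-byte header size is an assumed placeholder (the real figure depends on the OpenZFS version), and the 1 TiB device is hypothetical; the point is that smaller blocks, as with many small files, mean more headers.

```python
# Sketch: RAM consumed by L2ARC headers. Each block cached on the L2ARC device
# keeps a small header in the in-RAM ARC. HEADER_BYTES is an assumed
# placeholder; the real per-block size depends on the OpenZFS version.

HEADER_BYTES = 80  # assumption, not a measured value

GIB = 1024**3
KIB = 1024


def l2arc_header_ram_gib(l2arc_gib: float, avg_block_kib: float,
                         header_bytes: int = HEADER_BYTES) -> float:
    """GiB of RAM used by headers when l2arc_gib of L2ARC holds avg_block_kib blocks."""
    blocks = l2arc_gib * GIB / (avg_block_kib * KIB)
    return blocks * header_bytes / GIB


if __name__ == "__main__":
    # A hypothetical 1 TiB L2ARC device; smaller blocks mean more headers.
    for block_kib in (128, 16, 4):
        ram = l2arc_header_ram_gib(1024, block_kib)
        print(f"1 TiB L2ARC, {block_kib:>3} KiB blocks: ~{ram:.2f} GiB of RAM for headers")
```

Under these assumptions the overhead ranges from well under 1 GiB with 128 KiB blocks to around 20 GiB with 4 KiB blocks, RAM that would otherwise be available to the ARC itself.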

Write Performance: I’m more concerned about write performance. My thinking was that a fast ZIL device (SLOG) would improve write performance: once data is written to the ZIL, it is considered safe, which frees ZFS to handle subsequent writes in RAM. I’m not sure how to resolve any bottlenecks here, because I don’t fully understand how writes work.
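For reference, a simplified model of the write path, as a sketch rather than a measurement: in OpenZFS, asynchronous writes are buffered in RAM as dirty data and flushed to the pool in transaction groups, while the ZIL (and a dedicated SLOG device, if one is added) is only written for synchronous writes. The toy model below shows what that implies for a large burst. The dirty-data size and pool write rates are hypothetical inputs, and ZFS’s real write throttle delays writes gradually rather than switching abruptly as modeled here.

```python
# Toy model of a write burst landing in RAM and draining to the pool.
# Simplifications (assumptions): ZFS's write throttle is modeled as a hard
# switch rather than a gradual delay; dirty_gb and pool_gbps are hypothetical
# inputs, not ZFS defaults or measurements. Rates are in GB/s.


def client_burst_seconds(burst_gb: float, link_gbps: float,
                         pool_gbps: float, dirty_gb: float) -> float:
    """Seconds until the client has handed burst_gb to the server."""
    if link_gbps <= pool_gbps:
        # The pool keeps up; the network link is the bottleneck.
        return burst_gb / link_gbps
    # The RAM buffer fills at the difference between arrival and drain rates.
    fill_seconds = dirty_gb / (link_gbps - pool_gbps)
    received_before_full = link_gbps * fill_seconds
    if burst_gb <= received_before_full:
        return burst_gb / link_gbps
    # Once the buffer is full, new data is accepted only as fast as the pool drains it.
    return fill_seconds + (burst_gb - received_before_full) / pool_gbps


if __name__ == "__main__":
    link = 1.25  # 10 GbE expressed in GB/s, ignoring protocol overhead
    for pool in (0.8, 2.0):  # hypothetical sustained pool write rates
        for burst in (3, 200):
            t = client_burst_seconds(burst, link, pool, dirty_gb=4)
            print(f"pool {pool} GB/s, {burst:>3} GB burst: {t:.0f} s")
```

In this model, small bursts fit in RAM and finish at wire speed, but a 200 GB burst is dominated by the pool’s sustained write rate no matter how large the buffer is; for asynchronous writes, a SLOG does not change that term.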

Drive Choice: I plan to consult Backblaze’s annualized failure rate (AFR) stats and pick drives with power-loss protection (PLP), a low failure rate, a SATA interface, a single actuator, and ideally no helium.
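To see how an AFR figure translates into risk for a single vdev, here is a rough binomial sketch of the chance of losing a RAIDZ vdev while it resilvers after one drive has already failed. It assumes independent failures at a constant AFR, which is optimistic because real failures are correlated (same batch, resilver stress). The 1.5% AFR, 8-wide vdev, and resilver durations are hypothetical placeholders, not Backblaze numbers.

```python
# Rough odds of losing a RAIDZ vdev while it resilvers after one drive failure.
# Assumptions: failures are independent and follow a constant annualized
# failure rate (AFR). Real failures are correlated, so treat the result as
# optimistic. AFR, width, and resilver time below are hypothetical.
from math import comb


def p_drive_fails(afr: float, days: float) -> float:
    """Probability that one drive fails within `days`, given a constant AFR."""
    return 1 - (1 - afr) ** (days / 365)


def p_vdev_loss_during_resilver(width: int, parity: int, afr: float, days: float) -> float:
    """P(at least `parity` of the remaining width-1 drives fail during the resilver).

    One drive has already failed, so `parity` further failures exceed the
    vdev's tolerance of `parity` failed drives.
    """
    n = width - 1
    p = p_drive_fails(afr, days)
    # Sum the binomial tail directly to avoid cancellation for tiny p.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(parity, n + 1))


if __name__ == "__main__":
    afr = 0.015  # hypothetical 1.5% AFR; substitute figures for a specific model
    for days in (2, 7):
        for parity in (2, 3):
            p = p_vdev_loss_during_resilver(8, parity, afr, days)
            print(f"8-wide RAIDZ{parity}, {days}-day resilver: {p:.2e}")
```

Comparing RAIDZ2 and RAIDZ3 this way shows how much the third parity drive buys per vdev; with three vdevs in the pool, losing any one of them loses the pool.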

Thanks again for your time. If anyone can share test data on different setups or point me to resources showing how to calculate expected write performance, I would really appreciate it.