Given the replies from @rymandle05 and @DigitalGarden, I suspect RAM will be an issue for you. Did you consider this when designing your lab? Running both ZFS and virtualization on the same server means they will compete for RAM.

While I don’t have strong views on Proxmox versus TrueNAS SCALE, either way RAM will be a shared resource.

Proxmox, or any other virtualization host, wants to use as much of the server's RAM as possible to provide capacity for guests.

ZFS wants to use as much RAM as possible for its cache (the ARC), keeping the most frequently used data in memory.

Given what you want to achieve, I believe you are going to hit a ceiling with 128 GB of RAM.

I recall an article, either on this forum, Lawrence Systems, or Level1Techs, explaining that by default ZFS will use up to 50% of RAM for its cache.
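If you want to see how much RAM the ARC is actually using, here is a minimal sketch that assumes a Linux host with the OpenZFS module loaded, where ARC statistics are exposed in `/proc/spl/kstat/zfs/arcstats` (`size` is the current ARC size and `c_max` is its ceiling, both in bytes). The helper names `parse_arcstats` and `arc_summary` are hypothetical, just for illustration.

```python
from pathlib import Path

# Exposed by OpenZFS on Linux when the zfs kernel module is loaded.
ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")
GIB = 1024 ** 3


def parse_arcstats(text: str) -> dict[str, int]:
    """Parse arcstats into {name: value}; the first two lines are headers."""
    stats: dict[str, int] = {}
    for line in text.splitlines()[2:]:
        parts = line.split()
        # Each data row looks like: "<name> <type> <value>"
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def arc_summary(stats: dict[str, int]) -> str:
    """Report the current ARC size against its configured maximum."""
    return (f"ARC size {stats['size'] / GIB:.1f} GiB, "
            f"max {stats['c_max'] / GIB:.1f} GiB")


if __name__ == "__main__":
    if ARCSTATS.exists():
        print(arc_summary(parse_arcstats(ARCSTATS.read_text())))
    else:
        print("arcstats not found; is the zfs module loaded?")
```

Comparing `size` with `c_max` over a few days shows whether ZFS is actually filling its allowance or leaving RAM for guests.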

For my HL-15, I decided it would take over NAS duties from TrueNAS. (I have a post sharing my lab details, which includes my previous TrueNAS hardware.) Because my virtualization servers are separate, the default 45HomeLab build (default hardware, Bronze CPU) meets my needs, running Rocky Linux as the OS with Cockpit, ZFS, and other software modules. I did have to increase the RAM to its maximum configuration (8 x 64 GB DDR4, or 512 GB). My 3 Proxmox nodes connect to the HL-15 over 10GbE for backups and archives, and the HL-15's RAM usage averages around 270 GB, 24x7. Usage peaks higher and dips lower at times, but the average over the last 16 days is 270 GB.

My concern is that ZFS could take up 64 GB of your RAM. Your virtualization guests will want that same RAM for their services, or at the very least it will limit how many more guests you can provision.
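A rough budget makes the trade-off concrete. This sketch assumes the ARC may grow to half of RAM and reserves a hypothetical 8 GB for the host OS itself; both numbers are illustrative assumptions, not measurements, and GB is treated as GiB for simplicity. If you decide to cap the ARC, OpenZFS on Linux reads the `zfs_arc_max` module parameter (in bytes), commonly set in `/etc/modprobe.d/zfs.conf`. The helpers `ram_budget` and `arc_max_option` are hypothetical and only do the arithmetic and format that line.

```python
GIB = 1024 ** 3  # treat "GB" as GiB for this rough estimate


def ram_budget(total_gib, arc_fraction=0.5, host_reserve_gib=8):
    """Return (arc_gib, guest_gib) for an assumed ARC share and host reserve."""
    arc = total_gib * arc_fraction
    guests = total_gib - arc - host_reserve_gib
    return arc, guests


def arc_max_option(arc_gib):
    """Format a modprobe line that caps the ARC; zfs_arc_max is in bytes."""
    return f"options zfs zfs_arc_max={int(arc_gib * GIB)}"


for total in (128, 512):
    arc, guests = ram_budget(total)
    print(f"{total} GiB host: ARC up to {arc:g} GiB, "
          f"~{guests:g} GiB left for guests")

# Example: cap the ARC at 16 GiB instead of half of RAM.
print(arc_max_option(16))
```

On a 128 GB host this leaves roughly 56 GB for guests if the ARC takes its full half. On Proxmox with root on ZFS, the initramfs typically has to be refreshed (`update-initramfs -u`) for a changed `zfs_arc_max` to apply at boot; check the Proxmox documentation for your version.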

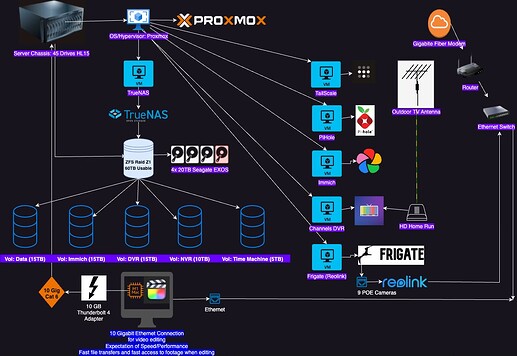

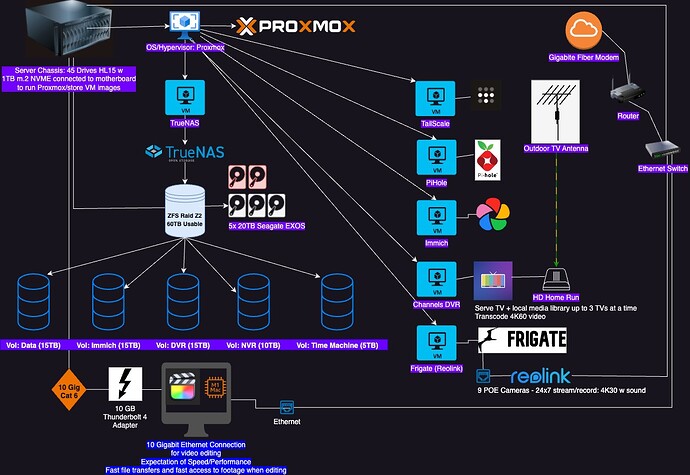

Reviewing the second image of your homelab (a great diagram, by the way), I estimate that Channels DVR and Frigate are going to consume a good portion of the HL-15's resources.

My other concern is that running Tailscale and Pi-hole on the HL-15 could disrupt your home network during any maintenance window that requires a reboot.

I use Tailscale and Pi-hole too, but not in virtual guests:

- Tailscale is configured on my Netgate/pfSense hardware.

- Pi-hole runs on a Raspberry Pi 4, though many of its features can be duplicated with a pfSense package (pfBlockerNG).

These are minor improvements, but they make those services independent of your virtualization solution.