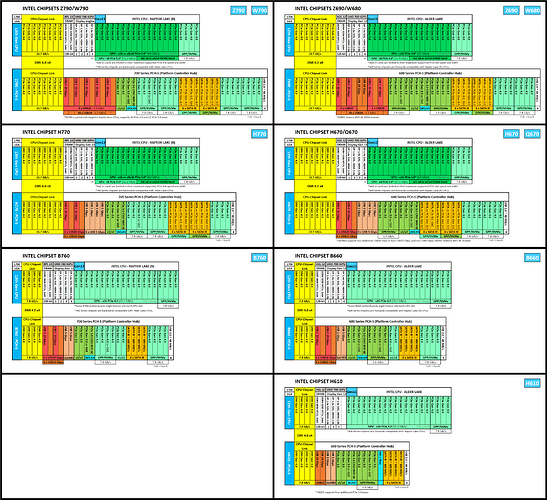

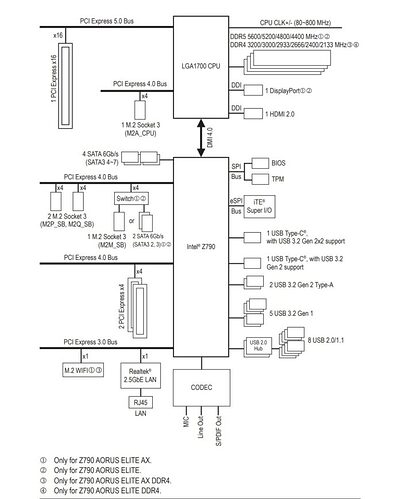

Some motherboard manufacturers include a block diagram in the user manual. I didn’t see one in the manual for your motherboard, but here is one for a Gigabyte Z790 board. All Z790 boards are going to look somewhat similar. This is an alternate way to view the connectivity info I attached above.

(One difference with your board is it would show the one PCIe x16 slot connected to the CPU as optionally being bifurcated as x8x8 with your second physical x16 slot.)

The point is, yes, the CPU directly supports 16 PCIe 5 lanes and 4 PCIe 4 lanes, but the latter are wired to one of the NVMe slots. That NVMe slot always has those four lanes allocated to it whether there is an SSD in the slot or not, and it doesn’t share that bandwidth with anything. ASUS could have done something like provide another x4 PCIe slot on the board and say “If you populate slot PCIE4_X4_CPU, M2A_CPU will be disabled”, but they didn’t. Most everyone wants their boot drive to have a fast direct CPU connection.

The only mutually exclusive configuration I see in glancing at the ASUS manual is that “M.2_4 slot shares bandwidth with SATA6G_5-8. SATA6G_5-8 will be suspended once either a SATA or NVMe device is detected at M.2_4”. I.e., if you populate M.2_4, you can’t use four of the SATA ports on the motherboard.

The PCIe lanes aren’t some sort of global resource that is allocated and freed as you plug and unplug things. The usage of the lanes is largely determined at design time, with a few exceptions such as the bifurcation options or the SATA port / fourth M.2 slot sharing mentioned above. Hopefully that makes sense.

Sorry, you would look for “-16i” cards, i.e. the 9305-16i, which has a PCIe 3 edge connector. There is a 9201-16i, but that is also PCIe 2 (but x8), so it may only be marginally better than the IO Crest card. I suspect the IO Crest card is also an expander type and multiplexes two of the SFF-8087 ports together (but I could be wrong). I mean, if it works, that’s fine. In theory the PCIe 2 x4 edge connector should have about 2 GB/s of bandwidth (5 GT/s per lane, roughly 500 MB/s usable per lane), which should support about 15 spinning rust drives. A 9305-16i will probably run hotter and use more power. It also uses the newer SFF-8643 connectors like on the backplane, so you would need a different cable set.
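If you want to sanity-check that estimate yourself, here is a rough sketch of the arithmetic. The PCIe 2 numbers (5 GT/s per lane, 8b/10b encoding) are standard; the per-drive figure of 130 MB/s is just my assumption for a typical spinning drive's sustained sequential throughput, so plug in your own drives' numbers. It also ignores protocol overhead, so treat the result as an upper bound.

```python
# Rough PCIe 2.0 link budget. All values in integer MB/s to avoid float noise.
MEGATRANSFERS_PER_LANE = 5000   # PCIe 2.0: 5 GT/s per lane
ENCODING_NUM, ENCODING_DEN = 8, 10  # 8b/10b: 8 payload bits per 10 bits on the wire


def link_bandwidth_mb_s(lanes: int) -> int:
    """Usable bandwidth of a PCIe 2.0 link in MB/s (before protocol overhead)."""
    payload_mbit_s = lanes * MEGATRANSFERS_PER_LANE * ENCODING_NUM // ENCODING_DEN
    return payload_mbit_s // 8  # bits -> bytes


def drives_supported(link_mb_s: int, per_drive_mb_s: int) -> int:
    """How many drives can stream at full speed simultaneously."""
    return link_mb_s // per_drive_mb_s


ASSUMED_DRIVE_MB_S = 130  # assumption: sustained sequential rate of one HDD

x4_link = link_bandwidth_mb_s(lanes=4)
print(f"PCIe 2 x4: {x4_link} MB/s")
print(f"Drives at full speed: {drives_supported(x4_link, ASSUMED_DRIVE_MB_S)}")
```

In practice all the drives only hit their sequential maximum at once during something like a scrub or resilver, so day-to-day use is usually well under this limit.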

Well, it’s hard to know without knowing anything about the use case. Maybe others can chime in. Why do you want to switch to Proxmox with TN virtualized? How many/what sort of containers and/or VMs do you want to run? Have you monitored the main dashboard in TN and looked at the Netdata reporting to get a feel for how the system is currently performing?

I have a general impression that Ryzen CPUs are more power efficient than Intel, but I’ve also seen videos disputing that. I would question whether an i9-13900 that isn’t overclocked is really much more power hungry than other CPUs if configured correctly. Of course, if you are overclocking it will draw a lot more power; that’s the way it works. If your current setup basically works, you can wait and see what the 15th gen Intels have to offer. If performance/watt is really a “main goal”, then you can work down Passmark’s CPU Power Performance ranking:

https://www.cpubenchmark.net/power_performance.html

Unfortunately, I don’t think you can filter it by socket or other criteria besides Intel/AMD, so you kind of have to know a bit about the naming schemes to get something in the same ballpark. For example, you could keep your motherboard and get an i9-14900T, which has about twice the performance/watt of an i9-13900. I don’t think K SKUs are on the list because they are overclockers. This wouldn’t be “keeping the power you have” though. The Passmark score would drop to about 40K vs about 60K for your 13900K. It’s not like you have a Xeon or AMD chip from 12 years ago. You’d have to analyze what it costs to buy a new CPU and sell the old one, and what the savings in electricity really are. If you live in California or Europe, maybe it’s worth it. If you live in Canada or many other parts of the US, probably not.
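To put rough numbers on that trade-off, something like the sketch below works. Every figure in the example calls is a made-up placeholder, not a measurement: the net upgrade cost, the average watts saved at the wall, and the electricity prices are all things you would need to fill in for your own situation.

```python
HOURS_PER_YEAR = 24 * 365  # assumes the NAS runs around the clock


def annual_savings(avg_watts_saved: float, price_per_kwh: float) -> float:
    """Yearly electricity savings in your currency for a constant power reduction."""
    kwh_per_year = avg_watts_saved / 1000 * HOURS_PER_YEAR
    return kwh_per_year * price_per_kwh


def payback_years(net_upgrade_cost: float, avg_watts_saved: float,
                  price_per_kwh: float) -> float:
    """Years until the CPU swap pays for itself; inf if nothing is saved."""
    savings = annual_savings(avg_watts_saved, price_per_kwh)
    if savings <= 0:
        return float("inf")
    return net_upgrade_cost / savings


# Hypothetical inputs: net cost = new CPU price minus resale of the old one.
NET_COST = 250.0     # placeholder
WATTS_SAVED = 30.0   # placeholder average reduction, measured at the wall

for label, price in [("expensive power", 0.35), ("cheap power", 0.10)]:
    years = payback_years(NET_COST, WATTS_SAVED, price)
    print(f"{label}: {annual_savings(WATTS_SAVED, price):.2f}/yr, "
          f"payback in {years:.1f} years")
```

The watts-saved input matters most, and the only reliable way to get it is to measure average draw at the wall under your real workload, since a mostly idle NAS saves far less than the TDP difference suggests.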