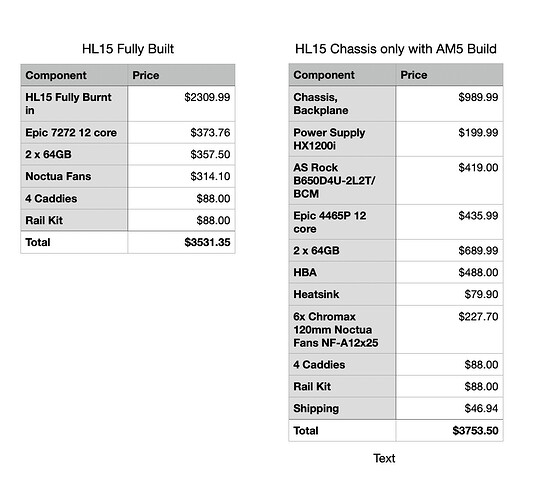

The image above is a comparison of the two builds I am considering. This is strictly a storage server that will complement my Mac Studio for video and photography work, alongside a separate (yet to be built) compute machine.

Am I missing anything in the build that would make the price an apples-to-apples comparison?

Do I need separate thermal paste or anything else for the build itself?

I have all these components in my cart at Newegg, except for the ones I plan to get from 45HomeLab.

Is the build worth the additional $222.20? I am getting DDR5, some additional speed, and hopefully better thermals, lower noise, and a lower power bill. Anything else I am missing?

That seems like a decent build to me. I have a couple of AM5/B650 builds myself. It should be fine, as long as your definition of “strictly a storage server” doesn’t include any sort of media streaming that requires transcoding.

The main things the full (SP3) build gives you are more PCIe lanes and potentially more RAM.

A few things to note:

- You only have one M.2 NVMe slot on the motherboard. You could put another drive in the PCIe x4 slot, but this may limit your options for redundant boot drives and/or a high-speed cache for your video processing, if those things are important to you.

- You don’t mention how many HDDs you plan to have initially or in the future, but there are discussions in the forum here about how the backplane is powered (indirectly) by four molex connectors from the PSU, and why you shouldn’t just use one 4x molex cable from the PSU. Since you will have a Corsair PSU that is (I think) compatible with the pinout of the one used in the full build, I would either send a special request to info@45homelab.com after placing your order, asking whether they could add a set of the short custom PSU molex connectors they use to your build for a small fee, or order a custom set from CableMod.

- You might just want to confirm that the HX1200 is short enough to fit in the case. Not saying it won’t; I'm too lazy to confirm. But longer PSUs may bump into the power distribution board or make it hard to work with the connectors on it. 1200W seems way overkill for your custom build, even if it is on sale. Since your build isn’t going to support the same level of compute and PCIe expansion (e.g., a GPU) as the full build, you don’t need to match it apples-to-apples. Why not get the RM850e instead?
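The case against hanging the whole backplane off a single 4x molex cable mostly comes down to arithmetic on spin-up current. The sketch below is a rough illustration under hypothetical assumptions, not figures from the thread: about 2 A at 12 V per 3.5" HDD during spin-up (check your drives' datasheets), all 15 HL15 bays populated, no staggered spin-up, and the load shared evenly across whatever PSU cables feed the backplane.

```python
# Rough 12 V spin-up load per PSU cable feeding the HL15 backplane.
# ASSUMPTIONS (hypothetical, not from the thread): ~2.0 A per drive at
# spin-up, load shared evenly between cables, no staggered spin-up.

BAYS = 15                 # HL15 drive bays
SPINUP_AMPS_12V = 2.0     # hypothetical per-drive 12 V spin-up current


def amps_per_cable(drives: int, cables: int,
                   amps_per_drive: float = SPINUP_AMPS_12V) -> float:
    """Return the 12 V current each PSU cable carries if the load splits evenly."""
    if cables < 1:
        raise ValueError("need at least one cable")
    return drives * amps_per_drive / cables


for cables in (1, 4):
    amps = amps_per_cable(BAYS, cables)
    # 1 cable  -> 30.0 A (360 W); 4 cables -> 7.5 A (90 W) under these assumptions
    print(f"{cables} cable(s): {amps:.1f} A at 12 V each ({amps * 12:.0f} W)")
```

With one cable the entire spin-up surge lands on a single run of wire and its connectors; spreading it over four cables cuts each one's share to a quarter.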

You’ll probably need thermal paste, but it depends on your cooler. It probably doesn’t come pre-pasted, but it might. I think the only tool you’ll need is a #2 Phillips, but maybe also the next size smaller. A stubby one may not work for the PCIe slot brackets. Plug in the PSU and ground yourself on it occasionally. And be sure to order the correct cable set with the HL15 for the HBA you choose.

I am in a build loop! Help me out!

What I absolutely want are:

Enough storage slots. I have 6 new drives already in hand and at least 6 older 2TB HDDs that I will drop in, so I have two vdevs to help with redundancy.

I want ECC. Please don’t try to talk me out of that, as this will be TrueNAS storage with ZFS.

Support for 10G networking.
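For a rough idea of what the two-vdev plan yields, here is a back-of-envelope sketch. The thread doesn't say which RAIDZ level will be used or how large the 6 new drives are, so RAIDZ2 on both vdevs and 12 TB new drives are hypothetical placeholders; ZFS metadata and padding overhead are ignored.

```python
# Back-of-envelope usable capacity for a pool of two RAIDZ vdevs.
# ASSUMPTIONS: RAIDZ2 on both vdevs (hypothetical), 12 TB new drives
# (hypothetical; the thread gives no size), 2 TB older drives,
# ZFS metadata/padding overhead ignored.

from dataclasses import dataclass
from typing import List


@dataclass
class Vdev:
    drives: int
    drive_tb: float
    parity: int  # 1 = RAIDZ1, 2 = RAIDZ2, 3 = RAIDZ3

    def usable_tb(self) -> float:
        # Each RAIDZ vdev gives up `parity` drives' worth of space to parity.
        return (self.drives - self.parity) * self.drive_tb


def pool_usable_tb(vdevs: List[Vdev]) -> float:
    # A pool stripes data across its vdevs, so usable space adds up.
    return sum(v.usable_tb() for v in vdevs)


new_drives = Vdev(drives=6, drive_tb=12.0, parity=2)  # hypothetical size
old_drives = Vdev(drives=6, drive_tb=2.0, parity=2)
print(f"usable ~{pool_usable_tb([new_drives, old_drives]):.0f} TB")
```

Because the pool stripes across both vdevs, redundancy lives inside each vdev: each one needs its own parity, and losing either vdev loses the whole pool.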

Other info:

I have a couple of SSDs hanging around the house that I will use for the boot drive.

I am looking for this storage server to offload video scratch files for Adobe Premiere Pro / FCP, in addition to a 2TB archive of photos plus a growing collection of video footage, as my kid and I are both getting into video making. I already got two Samsung 9100 Pro M.2 drives for it.

For work I need to get into AI. I have been playing with what they give us at work, and it is nice and all, but it doesn’t give me the flexibility to experiment and see what works. So I was planning to build an all-in-one machine on an SP5 platform, but that requires a CEB/EEB board and enough space for a GPU, which means I am basically waiting for the Beast version, which has seemed to be just on the horizon for the past 3 months.

All good points. Not enough M.2 slots. When I look for motherboards with two M.2 PCIe 5.0 x4 slots, they are either back in server-range pricing or AMD AM5 boards with only unofficial ECC support. I don’t care about AMD vs Intel.

Any other Motherboard suggestions?

I am trying to get a quote for the IMB-X1715-10G, though on that one, one of the M.2 slots is PCIe 4.0 only.

I’d like to better understand your plan to upgrade the CPU to the EPYC 7272 on the full build. Going back to your comment that this will be “strictly a storage server”, the base 7252 option is already more than enough for a NAS. Are you looking for more flexibility at some point in the future to run containers or something else?

All in all, I’d say that both options will work great as storage servers and will ultimately perform nearly the same, as you’ll be bottlenecked by spinning hard drives and network speed. I think it really comes down to the value of your time and the longevity of the hardware you’re buying today.


If all you want/need is two M.2 drives, you just need to add a single-M.2 riser to the PCIe x4 slot. My point was that people doing intensive/professional video editing over the LAN can have fairly sophisticated caching setups so they can scrub quickly without proxies and such. It doesn’t sound like your video work is that extreme. You may have this sufficiently covered for your use case with the “4 caddies”, which I assume are for putting SSDs into the backplane bays. Although SATA/SAS SSDs aren’t individually as fast as NVMe, in some RAID configurations a pool of them can be.
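To put rough numbers on the point about a pool of SATA SSDs, here is a sketch comparing idealized striped throughput against one 10 GbE link. It assumes about 550 MB/s per SATA SSD (SATA III tops out near 600 MB/s after encoding overhead), perfect scaling across a striped layout, and no controller or CPU bottleneck; real pools with parity or mixed I/O will scale less cleanly.

```python
# Idealized sequential throughput of N SATA SSDs striped together,
# compared with one 10 GbE link.
# ASSUMPTIONS: ~550 MB/s per SATA SSD, perfect striping,
# no controller/CPU bottleneck.

SATA_SSD_MBPS = 550          # assumed typical sequential rate per drive
TEN_GBE_MBPS = 10_000 / 8    # 10 Gb/s line rate = 1250 MB/s, best case


def striped_mbps(n_drives: int, per_drive_mbps: float = SATA_SSD_MBPS) -> float:
    """Idealized throughput of n drives striped RAID 0 style."""
    return n_drives * per_drive_mbps


for n in (1, 2, 4):
    total = striped_mbps(n)
    # 1 -> 550 MB/s (0.44x); 2 -> 1100 MB/s (0.88x); 4 -> 2200 MB/s (1.76x)
    print(f"{n} SATA SSD(s): ~{total:.0f} MB/s, "
          f"{total / TEN_GBE_MBPS:.2f}x a 10 GbE link")
```

Under these assumptions, a single SATA SSD can't fill a 10 GbE link on its own, but four striped together can exceed it.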

This is all still irrelevant to this build, right? It is still strictly a storage server? Hyperconverging AI is a whole different discussion.

OK. Perhaps others can suggest cheap ECC options, but the two words “cheap” and “ECC” are usually mutually exclusive. I personally am not obsessed with ECC. I’m storing ‘Linux ISOs’, not brokering financial transactions. I sleep perfectly well at night knowing pool scrubs and DDR5’s level of built-in ECC are sufficient for me.

And? That will do sustained R/W of 5000-7000 MB/s. Even a PCIe 3 NVMe with 3500 MB/s R/W will be able to saturate multiple 10 gigabit (1250 MB/s best case) network connections. You’re not going to notice that difference for a storage-centric workload. If you said “I need 25 gig ethernet” or “the system will have four concurrent 10 gig user connections” that would be different.
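The arithmetic behind that claim, using only the figures quoted above (10 Gb/s divided by 8 bits per byte gives the 1250 MB/s best case), is sketched below. These are best-case sequential numbers; small files, mixed reads and writes, and protocol overhead will land well below them.

```python
# How many 10 GbE links a single NVMe drive could keep full, using the
# sequential figures quoted in the post. Best case only.

TEN_GBE_MBPS = 10_000 / 8  # 10 Gb/s / 8 bits per byte = 1250 MB/s


def links_saturated(drive_mbps: float) -> float:
    """Number of 10 GbE links a drive's sequential rate could fill."""
    return drive_mbps / TEN_GBE_MBPS


drives = {
    "PCIe 3.0 NVMe": 3500,
    "PCIe 4.0 NVMe (low end)": 5000,
    "PCIe 4.0 NVMe (high end)": 7000,
}

for name, mbps in drives.items():
    # 3500 -> 2.8 links; 5000 -> 4.0 links; 7000 -> 5.6 links
    print(f"{name}: {mbps} MB/s = {links_saturated(mbps):.1f} x 10 GbE links")
```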