I’m trying to work out which IOU setting to use, and I’m flailing about trying to get it working. I want the card in slot 5 (I think).

Are you trying to use the card on a HL15 pre-built system or a custom built one? Also what are you trying to do with it? Install an OS to it, have Houston see the card/drives, or something else?

The more details you provide in your issue - the better the community can help out!

It was the pre-built. On further testing, it works in the x16 slot but not the x8 slot. My bet is that I need to get a power cable for it to work in that slot.

I have that carrier card working in slot 3. I did not connect any additional power or change the BIOS settings; I left everything at AUTO.

It recognized the NVMes without any issues. I used “lspci” to confirm that the 4 NVMe slots were present (as part of the HL-15’s hardware).
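For anyone who wants to repeat that check, here is a minimal bash sketch. It assumes the drives show up in lspci with the usual “Non-Volatile memory controller” class text; the function name is just something I made up for illustration.

```shell
# Count NVMe controllers in lspci-style text read from stdin.
# Assumes the standard "Non-Volatile memory controller" class name.
count_nvme_controllers() {
    # grep -c prints 0 and exits non-zero when nothing matches,
    # so "|| true" keeps the function's exit status clean.
    grep -ci 'non-volatile memory controller' || true
}

# Typical use on the server itself:
#   lspci | count_nvme_controllers
```

With four sticks on the carrier card you would expect this to print 4, plus one for any onboard M.2 drive.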

Feel free to reply here or send a direct message.

2023-12-03T05:30:00Z

There are 4 PCIe slots on the motherboard:

- Slot 6, which is PCIe x16
- Slot 5, which is PCIe x8
- Slot 3, which is PCIe x8
- Slot 2, which is PCIe x4 (in an x8 slot)

You stated you got it working in Slot 6, but which slot did you use for x8? I got it working in slot 3; can you try that slot?

I did order new NVMe sticks. I was going to use the carrier board either as a separate ZFS cluster or as separate individual drives. When they arrive, I can share more details.

I was trying it in slot 5, and it didn’t show up.

Slot 6 worked.

I did not try slot 3.

You’ve piqued my curiosity.

Here are a few commands that I used to verify the card was recognized. You need to use sudo or run the commands as root:

dmidecode -t slot

Here is the output from my HL-15:

    Getting SMBIOS data from sysfs.
    SMBIOS 3.2.1 present.

    Handle 0x000B, DMI type 9, 17 bytes
    System Slot Information
        Designation: PCH SLOT2 PCI-E 3.0 X4(IN X8)
        Type: x4 PCI Express 3 x8
        Current Usage: Available
        Length: Short
        ID: 2
        Characteristics:
            3.3 V is provided
            Opening is shared
            PME signal is supported
        Bus Address: 0000:01:00.0

    Handle 0x000C, DMI type 9, 17 bytes
    System Slot Information
        Designation: CPU SLOT3 PCI-E 3.0 X8
        Type: x8 PCI Express 3 x8
        Current Usage: In Use
        Length: Short
        ID: 3
        Characteristics:
            3.3 V is provided
            Opening is shared
            PME signal is supported
        Bus Address: 0000:68:00.0

    Handle 0x000D, DMI type 9, 17 bytes
    System Slot Information
        Designation: CPU SLOT5 PCI-E 3.0 X8
        Type: x8 PCI Express 3 x8
        Current Usage: Available
        Length: Short
        ID: 5
        Characteristics:
            3.3 V is provided
            Opening is shared
            PME signal is supported
        Bus Address: 0000:ff:00.0

    Handle 0x000E, DMI type 9, 17 bytes
    System Slot Information
        Designation: CPU SLOT6 PCI-E 3.0 X16
        Type: x16 PCI Express 3 x16
        Current Usage: Available
        Length: Short
        ID: 6
        Characteristics:
            3.3 V is provided
            Opening is shared
            PME signal is supported
        Bus Address: 0000:ff:00.0

    Handle 0x000F, DMI type 9, 17 bytes
    System Slot Information
        Designation: M.2 PCI-E 3.0 X4
        Type: x4 M.2 Socket 3
        Current Usage: In Use
        Length: Short
        Characteristics:
            3.3 V is provided
            Opening is shared
            PME signal is supported
        Bus Address: 0000:04:00.0

As stated earlier, Slot 2, Slot 3, Slot 5, and Slot 6 are the only PCI slots available on the motherboard.

As I only have 1 PCIe card installed within my HL-15, in Slot 3, you can see that its Current Usage is “In Use”. The output from the command also gives the Bus Address as 0000:68:00.0.

The other slots are marked as Available.
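If the full dmidecode listing is too long to scan, a small bash/awk filter can boil it down to one line per slot. This is only a sketch: the function name is hypothetical, and it assumes the field labels look the way they do in my output (Designation, Current Usage, Bus Address).

```shell
# Summarize `dmidecode -t slot` text (read from stdin) as one line per slot:
#   <designation> | <current usage> | <bus address>
summarize_slots() {
    awk '
        # Strip the label and keep the value for each field of interest.
        /^[ \t]*Designation:/   { sub(/^[ \t]*Designation:[ \t]*/, "");   name = $0 }
        /^[ \t]*Current Usage:/ { sub(/^[ \t]*Current Usage:[ \t]*/, ""); usage = $0 }
        # Bus Address is the last field of each entry, so print the line here.
        /^[ \t]*Bus Address:/   { sub(/^[ \t]*Bus Address:[ \t]*/, "");   print name " | " usage " | " $0 }
    '
}

# Typical use (needs root):
#   sudo dmidecode -t slot | summarize_slots
```

On my system the Slot 3 line would read “CPU SLOT3 PCI-E 3.0 X8 | In Use | 0000:68:00.0”, which gives you the address to feed into lspci.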

Using the Bus Address with this command:

lspci -s 0000:68:00.0

The output given was:

68:00.0 PCI bridge: PLX Technology, Inc. PEX 8734 32-lane, 8-Port PCI Express Gen 3 (8.0GT/s) Switch (rev ab)

The same “PLX Technology, Inc.” information is displayed in Cockpit/Houston, but the slot detail is not included.

I am hoping that helps you.

I can remove this carrier card and confirm that Slot 3 returns to Available and that the PLX Technology, Inc. device disappears from the lspci output.

I am going to use the same diagnostic process tonight when I install a PCIe HBA controller, as that card needs a PCIe Gen 3.0 x8 slot.

I hope these Linux CLI commands give you some additional help.
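One more check that may help when a card lands in a slot that is wired for fewer lanes than its physical size: comparing what the link can do against what it actually negotiated. The sketch below assumes the LnkCap and LnkSta lines that `lspci -vv` prints for PCIe devices when run as root; the function name is my own.

```shell
# Keep only the link capability and link status lines from `lspci -vv` text
# read from stdin, with leading whitespace removed.
# LnkCap is what the device supports; LnkSta is what was actually negotiated.
link_summary() {
    grep -E '^[[:space:]]*Lnk(Cap|Sta):' | sed -E 's/^[[:space:]]+//'
}

# Typical use (needs root, otherwise the capabilities are hidden):
#   sudo lspci -vv -s 0000:68:00.0 | link_summary
```

If LnkSta reports a narrower width than LnkCap, the card is running on fewer lanes than it could use, which is what I would expect in the x4 (in x8) slot.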

I finally installed 4 NVMe sticks on my Carrier card.

The NVMe drives show up as /dev/nvme1n1, /dev/nvme2n1, /dev/nvme3n1, and /dev/nvme4n1. I assume a 2-slot carrier card would show just /dev/nvme1n1 and /dev/nvme2n1.

The Houston web UI also shows the card as recognized within the “45 Drives System” page. There is a PCI section that shows the cards in a table.
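To tell which /dev/nvmeX controller sits behind the carrier card rather than the onboard M.2 slot, you can look up each controller’s PCI address in sysfs. A rough bash sketch, assuming the usual Linux layout where /sys/class/nvme/nvmeX/device links to the PCI device; the function name and the optional root argument are mine, the latter only so it can be tried against a test directory.

```shell
# Print "<controller> <PCI address>" for each NVMe controller found under a
# sysfs root. The root defaults to /sys.
nvme_pci_map() {
    local sysroot="${1:-/sys}" ctrl
    for ctrl in "$sysroot"/class/nvme/nvme*; do
        # Skip when the glob matched nothing or the device link is missing.
        [ -e "$ctrl/device" ] || continue
        # The resolved device link ends in the PCI address, e.g. 0000:6a:00.0.
        printf '%s %s\n' "$(basename "$ctrl")" "$(basename "$(readlink -f "$ctrl/device")")"
    done
}

# Typical use:
#   nvme_pci_map
```

Addresses that sit on buses behind the 0000:68:00.0 bridge (`lspci -tv` shows the tree) belong to the carrier card.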

First off I really appreciate you sharing the commands you used to check.

When it worked, I was able to use lsblk and see the NVMe drives with no issue. When it didn’t, I just moved on to another slot.

The sad truth is, I didn’t have a need for the other slots yet, so I just stuck it in the x16 slot.

No problem. I’m glad you have the card working.

Have a good Thursday.

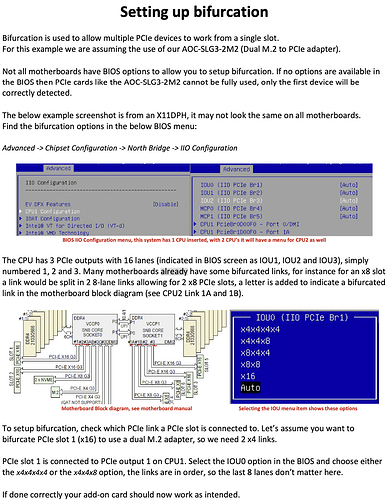

As a supplementary reference, I found some good detail in a PDF from the “ServeTheHome” forum site. There was a link to a PDF called “Setting up bifurcation.pdf”.

As the document was 1 page, I took a screenshot of it as a PNG. While it talks about a dual M.2 carrier card, the logic is also valid for a 4-slot carrier card.

There are two types of these sorts of carrier boards. The first is the simplest kind, such as the ASUS Hyper M.2. These require bifurcation support on the motherboard and work by simply routing the PCIe traces from the slot to the individual NVMe slots on the card. The board itself has zero or next to zero logic.

The second type is more sophisticated, and that’s what the AOC-SHG-4M2P card is. These use a PCIe multiplexing chip to take some number of PCIe lanes from the motherboard and map them to a greater number of lanes to support multiple 4-lane NVMe devices. In this case, it maps 8 lanes from the motherboard to 16 lanes for 4 gumstick SSDs. There are comically overprovisioned boards you can buy to map even more than just 4 SSDs. See @geerlingguy’s blog post about a board that holds 12.

You can tell when it’s the first type because there will always be some warning about motherboard PCIe bifurcation support. And you can tell the second type because the board carries a switch chip, usually under a heatsink. If that’s not conclusive enough, the lspci output from earlier in this thread shows that the card is a “PCI bridge: PLX Technology, Inc. ...”, and PLX is the major (only?) manufacturer of these PCIe switching/multiplexing chips. These cards do not need bifurcation to work.

The tradeoff should be obvious: you get capacity at the expense of performance.
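To put rough numbers on that, here is a back-of-the-envelope bash sketch. It assumes PCIe 3.0 signalling (8 GT/s per lane with 128b/130b encoding) and ignores protocol overhead, so real-world throughput will be lower; the function name is made up.

```shell
# Theoretical PCIe 3.0 bandwidth in GB/s, per direction, for a lane count.
# Assumes 8 GT/s per lane and 128b/130b encoding; protocol overhead is ignored.
pcie3_gbps() {
    LC_ALL=C awk -v lanes="$1" 'BEGIN { printf "%.2f\n", lanes * 8 * 128 / 130 / 8 }'
}

pcie3_gbps 8     # upstream link from the motherboard slot
pcie3_gbps 16    # combined downstream links (4 SSDs at x4 each)
```

That works out to about 7.88 GB/s upstream against 15.75 GB/s of downstream capacity, so the switch is oversubscribed 2:1. A single SSD can still use its full x4 link, but with all four busy at once they share the x8 uplink.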

Thank you for sharing this post from Jeff’s site. I assume it was an older blog post, as I do not recall it. I do follow his vlogs.

Is bifurcation supported in slot 2? I have the two-NVMe caddy installed there.

The slot I picked is x4 in an x8 form factor. Pulling it from the rack again.

Hi @myusuf3, you should not need to change any BIOS setting for the card to work.

As @pcHome mentioned, he simply slotted it into slot 3 and it was detected without issues.

It was in the slot furthest from the CPU, which is only x4.

Putting in slots that are physically larger than they’re electrically wired for should be illegal.

It’s saved me in a pinch with cards that are physically bigger than they need to be but only need x4 connectivity to function. But yes, I generally agree; it would be one less thing to think about.

Is anyone able to get the NVMes to join a zpool? If I try to create a pool with them, it just gives me an error through the Cockpit ZFS tab. Using the terminal, it creates the pool. Not sure what’s up with that!

I got the same error via the Cockpit ZFS tab as well. I have the 4-slot card.

I had not posted about it yet, as I wanted to compare with others first.
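For comparison purposes, here is roughly what the terminal route looks like. Nobody in this thread posted the exact command they ran, so treat this bash sketch as an assumption throughout: the pool name `nvmepool` and the `raidz` layout are placeholders, and the helper only prints a dry-run command for review instead of touching any disks.

```shell
# Print (do not run) a dry-run `zpool create` command for review.
# Arguments: <pool name> <vdev layout> <device>...
# The pool name and layout are the caller's choice; nothing in the thread
# says which ones were actually used.
zpool_dry_run_cmd() {
    local pool="$1" layout="$2"
    shift 2
    # -n asks zpool to show the resulting configuration without creating the pool.
    printf 'zpool create -n %s %s %s\n' "$pool" "$layout" "$*"
}

zpool_dry_run_cmd nvmepool raidz /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1 /dev/nvme4n1
```

Run the printed command with sudo to preview the layout, then drop the -n once it looks right. If the terminal succeeds where the Cockpit ZFS tab fails, posting both the command and the exact Cockpit error text would make the comparison much easier.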