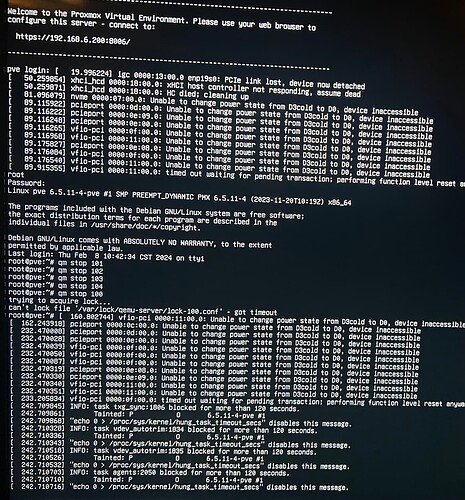

My Proxmox server decided to just absolutely crap the bed this morning, and I was able to pin it down to my LSI card kicking the bucket… rip

I know we all balk at the costs of gear, many times with the argument of “I can just buy a used Enterprise X, Y, or Z for that price.”

This feels like it may be the unfortunate side effect of purchasing used gear. Not to say I don’t have half a rack (and the rack itself) of used Enterprise goodies. But I know that I can’t possibly know what its life was like before my lab. Wish SMART or some other duty cycle metric was available for all sorts of IT equipment.

Sorry to hear about your card, man. Fortunately, if you’re using software RAID, a la BTRFS, ZFS, or similar, your data is much safer in this event than it would be if a HW RAID card died, and the array can easily be recovered by swapping out the bad part.

It’s working now. I pulled an NVIDIA card I wasn’t using and stopped using Proxmox, since I had been trying to pass the LSI card through to an Unraid VM under Proxmox, and the issue seems to be gone… I think Proxmox was messing with the card, as it kept trying to load the ZFS array on boot instead of letting Unraid have the card.

I’ve handled hundreds (if not thousands) of LSI cards over the years and have only had 3 die on me personally. 2 of those were my own fault (plugging them into a failed motherboard I’d neglected to label, which shorted the cards). The only ‘real’ failure was an engineering sample I was testing shortly after LSI acquired 3ware, which was supposed to be 24 port internal / 8 external with a built-in expander.

Not saying they can’t be killed, for sure, but they’re usually about the last thing I try to rule out at this point. Glad you got your issue sorted!

Yeah, it was Proxmox trying to load the ZFS array and ignoring my driver blacklisting for some odd reason… nothing to do with the card failing (thank god).
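For anyone hitting the same thing: one quick way to see whether a blacklist actually took effect is to check which kernel driver the host has bound to each PCI device. Below is a rough sketch, not anything Proxmox-specific. It just reads the standard Linux sysfs `driver` symlinks. The function name `bound_drivers` is made up for illustration. If you're doing passthrough, you'd generally hope to see `vfio-pci` on the HBA rather than the host storage driver (often `mpt3sas` for LSI SAS HBAs, though that depends on your card, so treat it as an assumption).

```python
import os
from pathlib import Path


def bound_drivers(sysfs_root="/sys/bus/pci/devices"):
    """Map each PCI address to the kernel driver bound to it (None if unbound).

    `bound_drivers` is a hypothetical helper name; the sysfs layout it reads
    is the standard Linux one.
    """
    result = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        # Not on Linux, or sysfs is not mounted: nothing to report.
        return result
    for dev in sorted(root.iterdir()):
        link = dev / "driver"
        # 'driver' is a symlink to the bound driver's directory;
        # it is absent when no driver has claimed the device.
        if link.is_symlink():
            result[dev.name] = os.path.basename(os.readlink(link))
        else:
            result[dev.name] = None
    return result


if __name__ == "__main__":
    for addr, drv in bound_drivers().items():
        print(addr, drv or "(no driver)")
```

Run it on the host and match the PCI address against what `lspci` shows for the card. If the host storage driver is still listed there after a reboot, the blacklist isn't being honored.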