Sorry, I meant VDEVs.

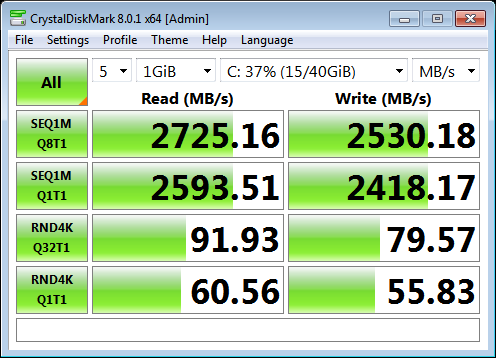

Yes, your CDM test seems odd. You say you’re having issues with both read and write, but from that test, I think your issues are probably just on the write side, unless you have some truly unattainable expectations of read throughput from spinning disks. I’m not sure how best to drill into where the issue is, though. I could throw out all sorts of questions, but they may all be irrelevant. How familiar are you with Proxmox or ZFS? A place to start would be testing outside the VM. In the VM, what sort of antivirus is running, just the normal Windows Defender? What version of Windows? How full is the ZFS pool?

I’m not saying it is your problem, but typical recommendations these days avoid RAID5/Z1 and recommend either RAIDZ2 for redundancy requirements or a striped-mirror configuration like RAID 1+0 for performance. Also, the usual recommendation is to limit the number of disks in a VDEV to around 8. For small 800GB disks, this probably isn’t as important as it is for someone with 20TB drives, but it is something to be aware of. Rules are meant to be broken, and you may have valid reasons for your setup.
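To put rough numbers on that trade-off, here is a back-of-the-envelope sketch in Python. The disk count of 12 is only a placeholder I picked for illustration, not your actual layout, and the math ignores ZFS metadata, padding, and reserved space, so real usable figures will come out somewhat lower.

```python
def usable_tb(disks, size_tb, layout):
    """Rough usable capacity of a single pool, ignoring ZFS overhead."""
    if layout == "raidz1":
        return (disks - 1) * size_tb   # one disk's worth of parity
    if layout == "raidz2":
        return (disks - 2) * size_tb   # two disks' worth of parity
    if layout == "mirror":
        return (disks // 2) * size_tb  # striped 2-way mirrors (RAID 1+0 style)
    raise ValueError(f"unknown layout: {layout}")


# Failures each layout is guaranteed to survive (per VDEV / per mirror pair).
GUARANTEED_FAILURES = {"raidz1": 1, "raidz2": 2, "mirror": 1}

DISKS = 12      # assumption: placeholder count, change to match your pool
SIZE_TB = 0.8   # 800GB disks, as in your setup

for layout in ("raidz1", "raidz2", "mirror"):
    capacity = usable_tb(DISKS, SIZE_TB, layout)
    survives = GUARANTEED_FAILURES[layout]
    print(f"{layout:7s} ~{capacity:.1f} TB usable, survives {survives} disk failure(s)")
```

With small disks the capacity you give up by going from Z1 to Z2 is modest, which is part of why Z2 tends to be the default suggestion.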

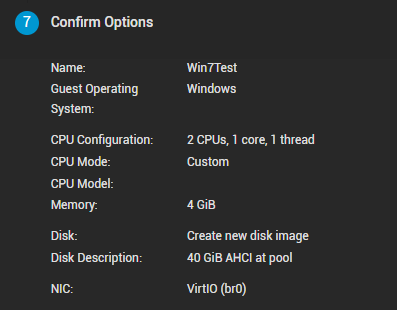

I can’t replicate your system exactly, but I set up a Windows 7 VM on a system running TrueNAS Scale. It has 12x 10TB SATA drives in a single VDEV with RAIDZ2. It also has a 93xx-16i card. I don’t think the other specs are remarkable or relevant. I created the VM basically using the defaults and a Windows 7+SP1 ISO, so it also isn’t remarkable or tuned in any way.

Here is what I got.

You might want to read through this thread if you haven’t seen it already:

https://forum.45homelab.com/t/zfs-write-errors-with-hl15-full-build-and-sas-drives/827

It has some commands you can use in the Linux shell for performance testing. I don’t think the particular failure mode they were experiencing (45Drives-supplied SFF-8643 cables connecting SAS drives to the full build motherboard) applies to you. Still, possible problems with the 45Drives-supplied SFF-8643 cables, assuming that is what you are using, are something to keep in the back of your mind, even though you say the pool isn’t going degraded.
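If you just want a quick sanity check on the host before working through the commands in that thread, something like the following Python sketch gives a crude sequential write number from outside the VM. It is not a substitute for a proper benchmark tool, and the function name and default sizes are my own choices. It writes random data so ZFS compression can't flatter the result, and it calls `fsync` so the timing includes flushing to disk.

```python
import os
import sys
import time


def sequential_write_mbps(path, total_mb=1024, block_mb=1):
    """Write roughly total_mb of random data to path and return MB/s."""
    # Random bytes, so compression on the dataset does not shrink the writes.
    block = os.urandom(block_mb * 1024 * 1024)
    blocks = total_mb // block_mb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # include the flush to disk in the timing
    elapsed = time.perf_counter() - start
    return (blocks * block_mb) / elapsed


if __name__ == "__main__" and len(sys.argv) > 1:
    # Pass a file path on the pool as the first argument.
    target = sys.argv[1]
    print(f"{sequential_write_mbps(target):.0f} MB/s sequential write")
    os.remove(target)  # clean up the test file
```

Run it on the Proxmox host with a path to a file on a dataset in the pool you are testing. A test size well above your RAM helps keep caching from skewing the number. If the host-side figure looks healthy and the VM's does not, that points toward the virtual disk or guest configuration rather than the drives or cabling.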

I tried to google quickly for issues with Proxmox Windows guest VM disk performance, but most of what came up was about SATA SSD and NVMe drives. I do have Proxmox in the lab, but not enough drives to make a suitable comparison out of it.